Hyperflex Solutions & Best Practices

The Elastic Stack, with its powerful capabilities in search, data analysis, and visualization, is transforming how businesses manage and utilize data. At Hyperflex, we help organizations optimize their Elastic environments through expert consulting tailored to meet unique challenges and goals.

From building search applications to enhancing Elastic Stack performance, our team ensures every solution is designed to deliver measurable results. Stay tuned for best practices and insights to help you make the most of your Elastic investment.

How to Use Elastic Agent Fleet for Real-Time Security Monitoring (Step-by-Step)

A step-by-step guide to deploying Elastic Agent with Fleet for real-time security monitoring. Learn Fleet architecture, endpoint protection with Elastic Defend, alerting, automations, and best practices from Hyperflex engineers.

Introduction

Security teams today operate in a world of continuous risk. Every endpoint, every container, every system log could hide the next threat.

That’s why Elastic Agent Fleet has become a cornerstone of modern security operations - unifying endpoint protection, log collection, and telemetry monitoring under one management plane.

Instead of manually configuring Beats on every host, Fleet lets you centrally manage hundreds of Elastic Agents from a single interface - complete with policies, integrations, and live health status.

At Hyperflex, we help enterprise and defense clients implement Elastic Security for mission-critical workloads. Our engineers optimize Fleet deployment for high-security networks, ensuring compliance, speed, and resilience.

Before You Start: Prerequisites & Requirements

Before deploying Fleet, confirm you have:

- Elastic Stack Version: 8.x or higher

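- License: Fleet and core Elastic Security features work on the free Basic license; advanced Elastic Defend protections (such as ransomware, memory threat, and malicious behavior protection) require Platinum or Enterprise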

- Installed Components: Elasticsearch, Kibana, Fleet Server

- Network: Agents must reach Fleet Server on port 8220

Additional checklist:

- Admin rights on Fleet Server host

- Certificates configured (for TLS)

- Outbound access to Elasticsearch and Kibana

Fleet supports Linux, Windows, and macOS - allowing hybrid or fully on-prem coverage.

Fleet Architecture Overview

Fleet introduces a hub-and-spoke model for agent management:

- Fleet Server: The command and control node that manages agent enrollment, policies, and status.

- Elastic Agent: Deployed on endpoints to collect logs, metrics, and security data.

- Elasticsearch: The storage and analytics layer for ingested events.

- Kibana: The management and visualization interface.

Data flow overview:

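- Elastic Agent collects logs, metrics, and security telemetry from endpoints.

- Each agent checks in with Fleet Server over TLS to receive its policy and report its status.

- Agents ship the collected data directly to the output defined in their policy, typically Elasticsearch (or Logstash); Fleet Server handles control traffic, not the data stream itself.

- Kibana displays dashboards, alerts, and rule-based detections.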

💡 Think of Fleet Server as your mission control - coordinating agents, updates, and configurations across your entire organization.

Step 1: Install and Enroll Elastic Agent

Download and Extract Agent

Enroll the Agent

In Kibana, generate an enrollment token:

Fleet → Agents → Enrollment Tokens → Create Token

Then run:

After successful enrollment, the agent appears in Kibana → Fleet → Agents.
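For scripted rollouts across many hosts, the enrollment step can be wrapped in a small helper. The sketch below is a minimal illustration, not Hyperflex tooling: it assumes the agent archive is already extracted into the working directory, uses placeholder values for the Fleet Server URL and token, and relies on the standard `elastic-agent install` flags `--url`, `--enrollment-token`, and `--certificate-authorities` (confirm them with `elastic-agent install --help` for your version).

```python
from __future__ import annotations

import shlex


def build_enroll_command(fleet_url: str, token: str, ca_path: str | None = None) -> list[str]:
    """Assemble an `elastic-agent install` command that enrolls a host into Fleet."""
    # Agents talk to Fleet Server over TLS on port 8220, so refuse plain HTTP.
    if not fleet_url.startswith("https://"):
        raise ValueError("Fleet Server URL must use https://")
    cmd = [
        "sudo", "./elastic-agent", "install",
        f"--url={fleet_url}",
        f"--enrollment-token={token}",
    ]
    # Only needed when Fleet Server presents a certificate from a private CA.
    if ca_path:
        cmd.append(f"--certificate-authorities={ca_path}")
    return cmd


if __name__ == "__main__":
    cmd = build_enroll_command(
        "https://fleet.example.internal:8220",  # placeholder Fleet Server URL
        "<ENROLLMENT_TOKEN>",                    # placeholder token created in Kibana
    )
    # Print a copy-pasteable command; subprocess.run(cmd, check=True) would execute it.
    print(shlex.join(cmd))
```

Rejecting `http://` URLs up front keeps scripted enrollment consistent with the TLS-first practice this guide recommends.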

Step 2: Connect Agents to Fleet Server

Fleet Server centralizes policy management and requires secure communication with Elasticsearch.

Run the following on your Fleet Server host:

Verify the service:

sudo systemctl status elastic-agent

Output should include Active: active (running).
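Beyond `systemctl`, you can confirm that Fleet Server itself answers on port 8220. This sketch assumes Fleet Server's status endpoint at `/api/status` returns JSON with a `status` field (for example `HEALTHY`); the host name and CA path used below are placeholders.

```python
from __future__ import annotations

import json
import ssl
import urllib.error
import urllib.request


def is_healthy(status_body: str) -> bool:
    """Return True when a Fleet Server status payload reports HEALTHY."""
    try:
        payload = json.loads(status_body)
    except json.JSONDecodeError:
        return False  # an error page or proxy response is not a healthy status
    return isinstance(payload, dict) and payload.get("status") == "HEALTHY"


def check_fleet_server(base_url: str, ca_file: str | None = None, timeout: float = 5.0) -> bool:
    """GET <base_url>/api/status over TLS and report whether Fleet Server is healthy."""
    # Verify the server certificate against the system store or a custom CA bundle.
    ctx = ssl.create_default_context(cafile=ca_file)
    try:
        with urllib.request.urlopen(f"{base_url}/api/status", context=ctx, timeout=timeout) as resp:
            body = resp.read()
    except urllib.error.HTTPError as err:
        # A degraded server may answer with a non-2xx status but still send JSON.
        body = err.read()
    return is_healthy(body.decode("utf-8", errors="replace"))
```

Usage, on a host that trusts the Fleet CA: `check_fleet_server("https://fleet.example.internal:8220", "/etc/pki/fleet-ca.crt")`, where both arguments are placeholders.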

Step 3: Add Security Integrations

Navigate to Kibana → Integrations and install key packages:

- Elastic Defend — Endpoint protection and detection

- Auditd Logs — Linux audit framework

- Windows Security Logs — Event logs from Windows hosts

Create a new Agent Policy (e.g., security-policy-prod) and assign the integrations.

Sample YAML Policy (elastic-agent.yml)

This policy applies preventive protection on Windows and detection on Linux for controlled coverage.

Step 4: Monitor in Kibana Security App

Open Kibana → Security → Dashboards to access real-time views.

You’ll see:

- Endpoint Alerts — Active detections

- Host Overview — CPU, process, and memory health

- Detection Rules — Elastic’s prebuilt rule engine

Example query to view alerts:

event.module : "endpoint" and event.kind : "alert"

This enables fast triage and validation across endpoints.
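When the same check needs to run from a script instead of the Kibana search bar, the KQL above can be expressed in Query DSL. A minimal sketch: the 24-hour window and page size are illustrative choices, and the `term` filters assume `event.module` and `event.kind` are mapped as `keyword`, as they are in ECS.

```python
import json


def endpoint_alert_query(hours: int = 24, size: int = 50) -> dict:
    """Query DSL equivalent of the KQL: event.module : "endpoint" and event.kind : "alert"."""
    return {
        "size": size,
        "sort": [{"@timestamp": {"order": "desc"}}],
        "query": {
            "bool": {
                # filter clauses skip scoring and are cacheable, which suits triage lookups
                "filter": [
                    {"term": {"event.module": "endpoint"}},
                    {"term": {"event.kind": "alert"}},
                    {"range": {"@timestamp": {"gte": f"now-{hours}h"}}},
                ]
            }
        },
    }


if __name__ == "__main__":
    # Request body for: GET logs-endpoint.alerts-*/_search
    print(json.dumps(endpoint_alert_query(), indent=2))
```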

💡 Hyperflex Insight

Hyperflex helps enterprises deploy and secure Elastic Agent Fleets at scale.

From architecture design to certificate automation, our consulting team ensures continuous security visibility and uptime for regulated industries.

Step 5: Create Alerts and Automations

1. Use Prebuilt Rules

Go to Security → Rules → Load Elastic Prebuilt Rules

Enable sets such as:

- Privilege Escalation

- Malware Prevention

- Persistence Mechanisms

2. Custom Rule Example

Alert when PowerShell spawns under a suspicious parent process:

process.parent.name : "winword.exe" and process.name : "powershell.exe"

Add email or webhook actions directly from the rule builder.
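The same custom rule can also be created as code through Kibana's detection engine API (`POST /api/detection_engine/rules`), which makes it reviewable and repeatable across environments. In this sketch the rule ID, index pattern, schedule, and risk score are assumptions to adapt, and authentication uses a Kibana API key; the request is built but not sent.

```python
from __future__ import annotations

import json
import urllib.request


def build_rule(index_patterns: list[str]) -> dict:
    """Custom query rule: PowerShell launched by Microsoft Word."""
    return {
        "rule_id": "custom-winword-spawns-powershell",  # hypothetical stable ID
        "name": "PowerShell spawned by Microsoft Word",
        "description": "powershell.exe started with winword.exe as its parent process.",
        "type": "query",
        "language": "kuery",
        "query": 'process.parent.name : "winword.exe" and process.name : "powershell.exe"',
        "index": index_patterns,
        "severity": "high",
        "risk_score": 73,
        "interval": "5m",   # run every 5 minutes...
        "from": "now-6m",   # ...looking back 6 minutes so late events are not missed
        "enabled": True,
    }


def rule_request(kibana_url: str, api_key: str, rule: dict) -> urllib.request.Request:
    """Prepare the create-rule request; pass it to urllib.request.urlopen() to send."""
    return urllib.request.Request(
        f"{kibana_url}/api/detection_engine/rules",
        data=json.dumps(rule).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "kbn-xsrf": "true",  # Kibana rejects API writes without this header
            "Authorization": f"ApiKey {api_key}",
        },
    )
```

For Elastic Defend process data, an index pattern such as `logs-endpoint.events.process-*` is a reasonable starting point.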

Elastic Defend Deep Dive

Elastic Defend replaces multiple endpoint agents with a single unified integration.

Key capabilities:

- Malware Prevention: Blocks known threats via built-in ML models.

- Behavioral Detection: Tracks process ancestry and suspicious activity.

- Memory Threat Protection: Identifies in-memory exploits and injections.

- Ransomware Protection: Prevents file encryption events in real time.

Configuration tip:

To enable ransomware protection via YAML, add:

Elastic Defend alerts are written to the logs-endpoint.alerts-* data streams and appear under Security → Alerts.

Automating Incident Response via Webhook

For modern SOCs, automation shortens mean time to respond (MTTR). Elastic Security allows native webhook integrations to send alerts to Slack, Teams, or SOAR systems.

Example: Webhook Action

- Go to Stack Management → Rules and Connectors → Create Connector

- Choose Webhook

- Provide the endpoint details: URL, HTTP method, headers, and authentication.

- Attach this connector to your Security rules.

Now, every time a detection fires, your webhook can automatically notify an analyst or trigger a remediation workflow.
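Connectors can also be created through Kibana's connectors API (`POST /api/actions/connector`). The payload below is a sketch for the `.webhook` connector type; the SOAR URL is a placeholder, and the field names should be checked against the connector documentation for your Kibana version.

```python
from __future__ import annotations

import json
import urllib.request


def webhook_connector(name: str, url: str) -> dict:
    """Payload for a Kibana webhook connector that POSTs JSON to a SOAR or chat endpoint."""
    if not url.startswith("https://"):
        raise ValueError("send alert data only to HTTPS endpoints")
    return {
        "name": name,
        "connector_type_id": ".webhook",
        "config": {
            "url": url,
            "method": "post",
            "headers": {"Content-Type": "application/json"},
            "hasAuth": False,  # set True and fill "secrets" for basic auth
        },
        "secrets": {},
    }


def connector_request(kibana_url: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) the create-connector request."""
    return urllib.request.Request(
        f"{kibana_url}/api/actions/connector",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "kbn-xsrf": "true",
            "Authorization": f"ApiKey {api_key}",
        },
    )


if __name__ == "__main__":
    # Placeholder endpoint for a SOAR intake hook.
    body = webhook_connector("soar-intake", "https://soar.example.internal/hooks/elastic")
    print(json.dumps(body, indent=2))
```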

Best Practices for Secure Fleet Management

- TLS First: Always use HTTPS for agent–server communication.

- Token Hygiene: Rotate enrollment tokens monthly.

- Role-Based Policies: Separate production, staging, and sandbox fleets.

- Monitor Fleet Health: Use Kibana → Fleet → Agents → Status for uptime metrics.

- Upgrade Gradually: Test Fleet and Elastic Defend updates on non-prod hosts first.

- Central Dashboards: Manage security KPIs (alert volume, response time) in Kibana.
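Monthly token rotation is easier to enforce with a small audit. This sketch assumes you have already fetched the enrollment token list from Fleet (for example via `GET /api/fleet/enrollment_api_keys`) and that each item carries `id`, `active`, and an ISO-8601 `created_at` field; it only reports candidates and leaves revocation to an operator.

```python
from __future__ import annotations

from datetime import datetime, timedelta, timezone


def stale_tokens(items: list[dict], max_age_days: int = 30, now: datetime | None = None) -> list[str]:
    """Return IDs of active enrollment tokens older than max_age_days.

    Assumed item shape: {"id": str, "active": bool, "created_at": ISO-8601 string}.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    stale = []
    for item in items:
        if not item.get("active", True):
            continue  # already revoked, nothing to rotate
        # fromisoformat() before Python 3.11 does not accept a trailing "Z"
        created = datetime.fromisoformat(item["created_at"].replace("Z", "+00:00"))
        if created < cutoff:
            stale.append(item["id"])
    return stale
```

Run from a scheduled job, this turns "rotate monthly" into a check rather than a habit.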

Troubleshooting Common Issues


Final Takeaway

Elastic Agent Fleet isn’t just about convenience - it’s a shift toward centralized, real-time defense. By bringing endpoint data, rules, and automation together, security teams can act faster and reduce blind spots across complex infrastructures.

For on-prem deployments, Fleet is the control tower that connects detection to response.

But to achieve true reliability, you need proper architecture, security hardening, and scaling strategy - areas where Hyperflex’s Elastic Consulting Services excel.

Hyperflex helps teams scale Elastic fast - with confidence.

From on-prem to hybrid cloud, we deliver optimized Elastic Security environments built for performance and compliance.

Contact us at marketing@hyperflex.co to explore how we can support your Elastic journey.

Written by Vishal Rathod, Senior Elastic Engineer at Hyperflex


Upgrading Elasticsearch from 6.8 to 8.x: A Real-World Modernization Blueprint for Enterprise Search & Observability

A real-world enterprise guide to upgrading Elasticsearch from 6.8 to 8.x. Learn how to modernize sharding, fix performance bottlenecks, implement RBAC and ILM, and prepare safely for Elastic Cloud migration—without downtime surprises.

1. Why Elasticsearch 6.8 → 8.x matters today

Elasticsearch 6.8 represents an older generation of the Elastic Stack, before many of the improvements that now define Elastic’s performance, security, and reliability standards.

Upgrading to Elastic 8.x enables:

- Built-in security by default

- Faster, smarter indexing

- Better search performance and ES|QL readiness

- Modern APIs replacing legacy behaviors

- Newer capabilities such as vector search and async search, alongside more mature ILM and ECS support

- Native compatibility with Elastic Cloud

For teams running security analytics, healthcare workloads, high-volume observability pipelines, or regulated environments, staying on 6.8 introduces long-term risks that keep growing.

This is why an Elasticsearch upgrade is not a luxury; it's a strategic move.

2. The modernization challenges enterprises face

Most companies stuck on 6.8 didn’t choose to stay there. Their systems evolved, their data grew, and they were busy keeping the lights on.

Over time, technical debt accumulates:

- Old mapping structures rigidly tied to 6.x indexing rules

- Index templates misaligned with modern ECS conventions

- Shards created years ago with no capacity planning

- Heavy aggregations slowing down essential dashboards

- Poorly separated node roles that overload CPU

- No RBAC or Spaces to isolate access

- Index bloat due to missing ILM policies

- Limited visibility into cluster health

These issues don’t disappear during an upgrade; they get exposed. A successful project has to fix them at the same time.

3. A real-world upgrade: modernizing a healthcare data platform

A large healthcare platform running sensitive clinical and patient workloads approached our team after years on Elasticsearch 6.8.

They were dealing with:

- Painfully slow queries

- Dashboards timing out

- CPU spikes across hot nodes

- No ILM policies

- No RBAC structure

- Compliance pressure to modernize security and access control

They wanted a clean path from 6.8 → 8.x, and eventually onto Elastic Cloud.

We handled Phase 1: redesigning, optimizing, and securing the environment so the upgrade would be safe and predictable.

This project is a strong reference blueprint for any enterprise approaching a similar upgrade.

4. Step 1 — Building the right migration & sharding strategy

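An Elasticsearch upgrade begins long before running the actual upgrade commands. If the underlying structure is flawed, upgrading simply amplifies existing problems. The path itself also needs planning: a 6.8 cluster cannot upgrade directly to 8.x and must first move to 7.17.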

Mapping modernization

Elasticsearch 8.x enforces stricter mapping rules than 6.x; most notably, mapping types (deprecated in 7.x) are gone, so every index has a single typeless mapping.

Legacy 6.x clusters often contain:

- keyword/text fields used incorrectly

- nested fields turning queries into slow monsters

- outdated analyzers

- custom tokenizers that break during migration

We rebuilt the mapping structure to:

- modernize analyzers

- unify field naming

- reduce memory overhead

- align with ECS patterns

- support future schema changes
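Two of these changes are easy to show in code. The sketch below is illustrative rather than the client's actual mapping: it strips the single mapping-type level that 6.x index bodies carry (8.x requires typeless mappings) and defines a field as `text` with a `keyword` sub-field, so one field supports both full-text search and exact-match aggregations. The `title` field and the sample index body are hypothetical.

```python
def to_typeless(legacy: dict) -> dict:
    """Convert a 6.x index body {"mappings": {"<type>": {...}}} to the typeless 8.x shape."""
    mappings = legacy.get("mappings", {})
    if "properties" in mappings:
        return legacy  # already typeless
    type_names = list(mappings)
    if (len(type_names) != 1
            or not isinstance(mappings[type_names[0]], dict)
            or "properties" not in mappings[type_names[0]]):
        raise ValueError(f"could not find a single mapping type with properties: {type_names}")
    return {**legacy, "mappings": mappings[type_names[0]]}


# Multi-field pattern: analyzed text for search, keyword for sorting and aggregations.
MODERN_MAPPING = {
    "properties": {
        "@timestamp": {"type": "date"},
        "title": {  # hypothetical field
            "type": "text",
            "fields": {"keyword": {"type": "keyword", "ignore_above": 256}},
        },
        "host": {"properties": {"name": {"type": "keyword"}}},  # ECS host.name
    }
}
```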

Sharding redesign

Most legacy clusters suffer from either oversharding (too many small shards) or undersharding (massive single shards).

We designed a sharding strategy based on:

- growth trends

- ingestion volume

- query patterns

- retention lifecycle

- warm/cold data tiering

This improved reliability and CPU efficiency immediately.
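A back-of-the-envelope version of that sizing logic, under assumed inputs: daily volume, rollover period, and a 40 GB target shard size, which sits inside Elastic's commonly cited 10 to 50 GB range. The numbers in the example are hypothetical.

```python
import math


def plan_primaries(daily_gb: float, rollover_days: int, target_shard_gb: float = 40.0) -> int:
    """Primary shards per index so each shard lands near target_shard_gb at rollover.

    target_shard_gb = 40 is an assumption, not a universal rule.
    """
    index_gb = daily_gb * rollover_days
    return max(1, math.ceil(index_gb / target_shard_gb))


def total_shards(primaries: int, replicas: int, retention_days: int, rollover_days: int) -> int:
    """Shards the cluster must hold across the whole retention window."""
    indices = math.ceil(retention_days / rollover_days)
    return indices * primaries * (1 + replicas)


if __name__ == "__main__":
    # Hypothetical workload: 120 GB/day, daily rollover, 1 replica, 30-day retention.
    p = plan_primaries(daily_gb=120, rollover_days=1)
    print(p, total_shards(p, replicas=1, retention_days=30, rollover_days=1))  # prints 3 180
```

In 8.x, ILM rollover on `max_primary_shard_size` can enforce the same target automatically instead of relying on a fixed rollover period.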

Upgrade simulation

Before touching production, we ran:

- snapshot restore tests

- reindex pipelines

- analyzer compatibility checks

- pipeline dry runs

- rolling upgrade scenario tests

A safe upgrade is built on simulation, not hope.
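One simulation step worth automating is the deprecation check, which belongs on both the 6.8 cluster and the intermediate 7.17 cluster. This sketch summarizes a `GET _migration/deprecations` response by severity; it assumes the response groups issues either into lists (cluster and node settings) or into per-name maps (index settings and similar), with each issue carrying a `level` of `warning` or `critical`.

```python
from collections import Counter


def count_by_level(report: dict) -> Counter:
    """Tally deprecation issues by level across every section of the report."""
    counts: Counter = Counter()
    for section in report.values():
        if isinstance(section, list):        # e.g. cluster_settings, node_settings
            issues = section
        elif isinstance(section, dict):      # e.g. index_settings: {index_name: [issues]}
            issues = [issue for group in section.values() for issue in group]
        else:
            continue
        for issue in issues:
            counts[issue.get("level", "unknown")] += 1
    return counts


def upgrade_blocked(report: dict) -> bool:
    """Critical issues must be resolved before the next upgrade hop."""
    return count_by_level(report)["critical"] > 0
```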

5. Step 2 — Eliminating performance bottlenecks at scale

Performance problems don’t fix themselves during an upgrade. They get worse.

Through profiling, node monitoring, and workload analysis, we uncovered:

- inefficient filters

- aggregations scanning excessive data

- heavy nested queries

- thread pool exhaustion

- high GC pressure

- unbalanced node responsibilities

What we fixed

- Rewrote high-impact queries

- Introduced caching strategies

- Split ingest-heavy nodes from search-heavy ones

- Tuned heap usage and fielddata

- Implemented hot/warm separation

- Reduced CPU churn from unnecessary refresh cycles

By the time tuning was completed, latency dropped significantly—and the cluster became stable enough to handle an 8.x upgrade.

6. Step 3 — Strengthening security with RBAC & Kibana Spaces

For any organization handling regulated or sensitive data, security is not optional.

Elastic 6.8 lacked the built-in security defaults that now come enabled in 8.x. We established a modern security foundation:

RBAC (Role-Based Access Control)

Granular roles for:

- platform engineers

- developers

- data analysts

- compliance auditors

RBAC prevented over-privileged access and aligned with regulatory expectations.
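Roles like these are defined through the security API (`PUT _security/role/<name>`). The bodies below are a minimal sketch, not the client's configuration: the index patterns and the `patient.*` fields are hypothetical, and field-level security (`field_security`) requires a Platinum or higher license. A small check flags roles that grant overly broad privileges.

```python
ROLES = {
    "platform_engineer": {
        "cluster": ["monitor", "manage_ilm", "manage_index_templates"],
        "indices": [{"names": ["*"], "privileges": ["monitor", "manage"]}],
    },
    "developer": {
        "cluster": ["monitor"],
        "indices": [{"names": ["dev-*"], "privileges": ["read", "write", "create_index"]}],
    },
    "data_analyst": {
        "cluster": [],
        "indices": [{
            "names": ["clinical-events-*"],  # hypothetical index pattern
            "privileges": ["read", "view_index_metadata"],
            # hide hypothetical patient identifiers from analysts
            "field_security": {"grant": ["*"], "except": ["patient.*"]},
        }],
    },
    "compliance_auditor": {
        "cluster": ["monitor"],
        "indices": [{"names": ["audit-*"], "privileges": ["read"]}],  # hypothetical pattern
    },
}


def over_privileged(roles: dict) -> list[str]:
    """Names of roles granting 'all' on indices, or 'all'/'manage' at cluster level."""
    flagged = []
    for name, body in roles.items():
        cluster = set(body.get("cluster", []))
        index_privs = {p for grant in body.get("indices", []) for p in grant["privileges"]}
        if {"all", "manage"} & cluster or "all" in index_privs:
            flagged.append(name)
    return sorted(flagged)
```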

Kibana Spaces

Spaces were used to create clean separation:

- dashboards

- visualizations

- ML jobs

- logs and events per team

This reduced risk, improved governance, and greatly simplified audit requirements.

7. Step 4 — Using ILM & Monitoring to fix operational debt

Long-term operations often suffer because nobody had time to build proper lifecycle management.

Index Lifecycle Management (ILM)

We created ILM policies that:

- roll over indices based on size & age

- move warm data to cost-efficient nodes

- freeze older indices

- delete stale data automatically

- prevent oversized shards from forming
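A policy in that spirit, written for 8.x, might look like the sketch below. The ages and sizes are assumptions to tune against your retention rules, and the phase-ordering helper is illustrative. One 8.x-specific difference: the ILM `freeze` step listed above was removed in 8.0, so this version moves older data to the cold phase instead of freezing it.

```python
_UNIT_SECONDS = {"d": 86400, "h": 3600, "m": 60, "s": 1}


def ilm_policy(warm_after: str = "7d", cold_after: str = "30d", delete_after: str = "365d") -> dict:
    """Body for PUT _ilm/policy/<name>: hot -> warm -> cold -> delete (ages are assumptions)."""
    return {
        "policy": {
            "phases": {
                "hot": {"actions": {
                    # roll over before any primary shard passes 50 GB, or daily
                    "rollover": {"max_primary_shard_size": "50gb", "max_age": "1d"},
                    "set_priority": {"priority": 100},
                }},
                "warm": {"min_age": warm_after, "actions": {
                    "forcemerge": {"max_num_segments": 1},
                    "set_priority": {"priority": 50},
                }},
                # on tiered clusters, ILM migrates data to warm/cold nodes automatically
                "cold": {"min_age": cold_after, "actions": {"set_priority": {"priority": 0}}},
                "delete": {"min_age": delete_after, "actions": {"delete": {}}},
            }
        }
    }


def to_seconds(age: str) -> int:
    """Parse simple ILM ages such as '7d' or '12h' (d/h/m/s units only)."""
    return int(age[:-1]) * _UNIT_SECONDS[age[-1]]


def phases_in_order(policy: dict) -> bool:
    """min_age must not decrease from one phase to the next."""
    ages = [to_seconds(p.get("min_age", "0s")) for p in policy["policy"]["phases"].values()]
    return ages == sorted(ages)
```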

Dedicated Monitoring Cluster

A separate monitoring cluster gave:

- early detection of CPU and heap issues

- visibility into long-running queries

- structured SLO tracking

- capacity planning insights

This eliminated years of operational pain in just weeks.

8. Step 5 — Preparing for Elastic Cloud migration

Phase 1 ended with a clean, modernized cluster ready for upgrade into 8.x and then Elastic Cloud.

We prepared for cloud migration by:

- checking API compatibility

- building a snapshot strategy

- mapping on-prem node roles to cloud templates

- designing safe VPC peering + private traffic flows

- preparing for integrations with Elastic Security & Observability

- validating cross-cluster search implications

Going to Elastic Cloud becomes dramatically easier when the on-prem environment is already modern.
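For the snapshot strategy, snapshot lifecycle management (SLM, available from 7.4, so not on 6.8 itself) can take and expire snapshots on a schedule. A minimal sketch of a `PUT _slm/policy/<name>` body, assuming an already registered repository named `migration-repo` (hypothetical) that the target environment can also read:

```python
def slm_policy(repository: str, keep_days: int = 30) -> dict:
    """Nightly snapshot policy body for PUT _slm/policy/<name>."""
    return {
        "schedule": "0 30 1 * * ?",           # 01:30 UTC daily (Elasticsearch cron syntax)
        "name": "<nightly-snap-{now/d}>",     # date math gives each snapshot a unique name
        "repository": repository,
        "config": {"indices": ["*"], "include_global_state": True},
        "retention": {
            "expire_after": f"{keep_days}d",
            "min_count": 5,                   # always keep a few restore points
            "max_count": 50,
        },
    }


def retention_is_sane(policy: dict) -> bool:
    """min_count must be positive and not exceed max_count."""
    r = policy["retention"]
    return 0 < r["min_count"] <= r["max_count"]


if __name__ == "__main__":
    print(slm_policy("migration-repo"))  # hypothetical repository name
```

Restoring one of these snapshots into a scratch cluster on a regular cadence is what proves the strategy works.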

9. Common pitfalls during Elasticsearch upgrades

Enterprises often underestimate upgrade complexity. These are the recurring pain points seen across dozens of projects:

1. Not fixing sharding beforehand

Upgrades don’t magically fix bad sharding—they magnify it.

2. Ignoring mapping conflicts

6.x mappings frequently break in 7.x/8.x if not cleaned up.

3. Forgetting ingest pipelines

Older pipelines often contain processors deprecated in newer versions.

4. Not testing snapshot restore

Snapshots can only be restored into the same or the next major version, so indices created in 6.x cannot simply be restored into 8.x; testing restores is mandatory.

5. Skipping rolling upgrade simulation

A real test run exposes node role issues, plugin conflicts, or template problems.

6. Treating security as “post-upgrade work”

Security must be integrated before the upgrade, not after.

7. Believing Elastic Cloud migration is copy-paste

It’s safe only when the source system is stable and modern.

10. Lessons learned from the field

Across all major Elasticsearch upgrades, patterns emerge:

- A clean migration strategy is 50% of the work.

- Most performance issues are caused by non-optimal queries and outdated sharding.

- RBAC and Spaces bring instant clarity and governance.

- ILM removes 80% of future operational issues.

- Cloud migration is safer when the on-prem cluster is already optimized.

- Upgrading Elasticsearch is not a version change — it’s a platform transformation.

High-performing teams treat upgrades as modernization projects, not version bumps.

11. Final Takeaway

Modernizing Elasticsearch from 6.8 → 8.x is one of the most impactful steps an engineering team can take to improve reliability, performance, and future scalability.

But it requires:

- careful planning

- architectural redesign

- performance tuning

- security hardening

- operational cleanup

- structured testing

Hyperflex’s Elasticsearch Consulting Services support every phase—from preparation to execution to cloud migration—ensuring safe upgrades with no downtime surprises.

Hyperflex helps teams scale Elastic fast—with confidence.

Contact us at info@hyperflex.co to explore how we can support your Elastic journey.

Elasticsearch Consulting New York: Global Expertise, Local Presence with Hyperflex

Power your search infrastructure with Hyperflex’s Elasticsearch Consulting Services in New York. Our Elastic-certified engineers design, secure, and scale clusters for finance, media, and enterprise workloads - delivering faster performance, higher reliability, and 24/7 global support.

Introduction

Data never sleeps — and in New York, neither can your search infrastructure. Whether you’re analyzing trades in real time or managing millions of transactions a day, Elasticsearch sits at the heart of how modern enterprises make decisions.

At Hyperflex, we provide Elasticsearch Consulting Services in New York — and across the world — combining certified engineering expertise with real-world delivery excellence.

Hyperflex helps organizations from SMBs to large enterprises deploy, secure, and scale Elasticsearch with precision: faster, safer, and smarter.

Our New York office enables in-person collaboration and technical workshops, while our global delivery centers provide 24/7 coverage for enterprises operating across time zones. Whether you run Elastic on-prem, in Elastic Cloud, or in a hybrid model, we help you architect, secure, and scale Elasticsearch with confidence.

Why Elasticsearch Matters — in New York and Beyond

New York runs on data. From finance to media, organizations depend on Elasticsearch to process, analyze, and visualize information in milliseconds.

Elastic’s stack — Elasticsearch, Kibana, Beats, and Logstash — transforms raw data into actionable intelligence. It supports:

- Financial services identifying risks through real-time log correlation.

- Digital businesses improving search and discovery experiences.

- Enterprises tracking uptime, metrics, and performance at scale.

- Public institutions ensuring visibility and security compliance.

Yet, Elasticsearch’s power depends on thoughtful design, scaling, and optimization. That’s where Hyperflex Consulting makes the difference.

Our Elasticsearch Consulting Services

1. Elastic Architecture & Deployment

We design and implement clusters built for resilience and scalability. From shard strategies and ILM (Index Lifecycle Management) to snapshot automation and data tiering, our architects ensure your Elastic deployment performs efficiently across Elastic Cloud, AWS, Azure, or on-prem infrastructure.

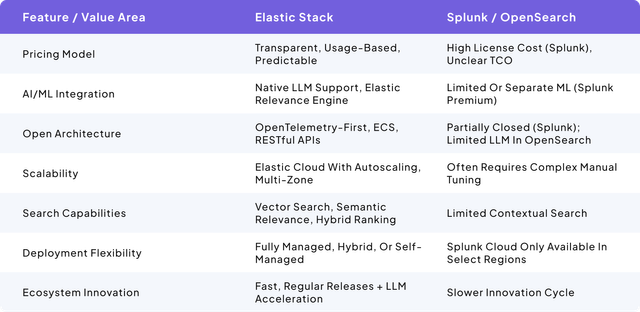

2. Migration & Version Upgrades

We handle Splunk-to-Elastic migrations, Elasticsearch upgrades (7→8→9), and cross-cluster reindexing through proven blue-green strategies. Each migration includes full validation, deprecation checks (GET _migration/deprecations), and rollback protection to guarantee zero disruption.

3. Observability & Security Solutions

We deploy Elastic Observability and Elastic Security to unify logs, metrics, and traces under one view.

Our consultants fine-tune dashboards, alerts, and detection rules to help teams spot issues early and respond fast using Kibana visualizations, machine learning, and ES|QL analytics.

4. Managed Elastic Services (Hyperflex Care)

With Hyperflex Managed Services, you get continuous monitoring, automated tuning, and proactive alerting. We provide monthly health reviews, optimize shard allocation, and maintain balanced data retention policies to ensure long-term performance.

Hyperflex Elastic Solutions

Beyond consulting, Hyperflex has developed automation tools and service packages that reduce risk and increase Elastic ROI.

• Seamless Upgrades Tool

An automation framework for version upgrades (7.x → 8.x → 9.x) — managing snapshot creation, validation, and rollback automatically to minimize downtime.

• Splunk-to-Elastic Migration Tool

A proprietary utility that maps Splunk data models, field types, and pipelines into Elastic — reducing migration time by up to 70% and eliminating manual errors.

• 14/7 Elastic Coverage Packages

Tailored coverage for enterprises that operate nonstop:

- Weekend Coverage: Fast response for production issues.

- Full Holiday Coverage: 24/7 availability during seasonal peaks.

- Premium 24/7 Enterprise: SLA-backed coverage with weekly optimization reviews.

• Elastic Security & SIEM Dashboards

Prebuilt visualizations for real-time visibility into alerts, compliance, and endpoint activity using Elastic Security.

These tools and services empower organizations across industries to achieve high-performing, cost-efficient Elastic environments at global scale.

Training and Enablement

Hyperflex offers hands-on enablement sessions to help teams master Elastic tools and best practices.

We don’t provide generic training — instead, we deliver project-based learning built around your data and environment.

Our enablement programs include:

- On-Site Workshops (NYC Office): Practical sessions with our engineers to teach your team how to manage and monitor Elastic effectively.

- Admin and Analyst Enablement: Focused sessions on index management, Kibana dashboards, alerting, and performance optimization.

- Elastic Security Readiness Sessions: Deep dives into rule creation, anomaly detection, and security monitoring.

- Knowledge Transfer (KT) Programs: Structured handoff so your internal teams can confidently maintain systems post-engagement.

Hyperflex doesn’t just build Elastic — we empower your team to master it.

Hyperflex Training Programs

For teams ready to go deeper, Hyperflex Training Programs deliver private, live, and hands-on training across Elastic, OpenSearch, and Generative AI.

Learn directly from the engineers who design, deploy, and scale production-grade search, observability, and AI systems — across any stack or environment.

Programs are available online or on-site in New York and other global locations.

Explore formats and curriculums at hyperflex.co/training or reach out at training@hyperflex.co.

Why Choose Hyperflex

- Elastic Certified Engineers: Every engagement is led by Elastic-certified professionals with deep delivery experience.

- Global Reach, Local Presence: Our New York office allows in-person consulting, while our global teams ensure 24/7 continuity.

- Enterprise-Ready: Trusted by organizations managing massive, mission-critical data volumes.

- Future-Ready: We align with Elastic’s roadmap — including Vector Search, GenAI, ES|QL, and AIOps.

We don’t just manage Elasticsearch — we engineer performance, scalability, and capability transfer into every deployment.

How We Work

- Discovery & Audit: On-site or remote assessment to identify scaling, performance, and security gaps.

- Architecture & Implementation: Cluster design, deployment, and integration of pipelines, dashboards, and data tiers.

- Optimization & Enablement: Query profiling, shard optimization, and hands-on sessions to ensure long-term success.

- Continuous Support: Managed monitoring, health checks, and performance reviews through Hyperflex Care.

Engineering Insight: Scaling Elastic the Right Way

Performance isn’t about bigger clusters — it’s about smarter architecture. Our engineers rely on Elastic APIs to detect bottlenecks before they cause downtime.

Example checks:

GET _cluster/stats

GET _cat/indices?v&s=store.size:desc

GET _nodes/hot_threads

By combining Elastic’s native telemetry with proprietary diagnostic scripts, Hyperflex identifies hot nodes, storage imbalances, and query inefficiencies long before they affect end users.
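As an illustration of the storage-imbalance check, the sketch below ranks indices from `GET _cat/indices?format=json&bytes=b` (JSON rows with sizes in raw bytes) and reports each index's share of total storage. It is a simplified stand-in, not the proprietary diagnostic scripts mentioned above; the sample rows are hypothetical.

```python
from __future__ import annotations


def top_indices(rows: list[dict], n: int = 5) -> list[tuple[str, int, float]]:
    """Largest indices as (name, bytes, percent of total store size)."""
    # Closed indices report no store.size, so skip them.
    sized = [(r["index"], int(r["store.size"])) for r in rows if r.get("store.size") is not None]
    sized.sort(key=lambda item: item[1], reverse=True)
    total = sum(size for _, size in sized) or 1
    return [(name, size, round(100 * size / total, 1)) for name, size in sized[:n]]


if __name__ == "__main__":
    sample = [  # hypothetical _cat/indices rows
        {"index": "logs-app-2024.05", "store.size": "7000000000"},
        {"index": "metrics-2024.05", "store.size": "3000000000"},
        {"index": "closed-old", "store.size": None},
    ]
    for name, size, pct in top_indices(sample):
        print(f"{name:<20} {size / 1e9:6.1f} GB {pct:5.1f}%")
```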

FAQ

Q1: Do you only work in New York?

No. While our office is in New York City, Hyperflex delivers Elastic consulting, managed services, and training globally — across North America, Europe, and APAC.

Q2: Do you offer official Elastic training?

We don’t sell standard vendor training. Instead, we provide private, live programs designed around your environment — covering Elastic, OpenSearch, and GenAI.

Q3: Do you support both Elastic Cloud and on-prem setups?

Yes. We work across Elastic Cloud, AWS OpenSearch, and self-managed clusters, adapting to your compliance and performance needs.

Final Takeaway

Whether your operations are in New York, London, Singapore, or Dubai — Hyperflex delivers trusted Elasticsearch Consulting, backed by hands-on enablement and world-class training.

Our NYC consultants are available for in-person sessions and executive workshops, while our global team ensures nonstop Elastic performance and scalability.

Contact us: info@hyperflex.co

Hyperflex helps teams scale Elastic fast—with confidence. Contact us to explore how we can support your Elastic journey.

Why IoT Companies Need Expert Elasticsearch Consulting for Real-Time Data Validation

IoT leaders rely on Elasticsearch to process billions of sensor events in real time — but scaling it requires expert validation and architecture. Learn how Hyperflex’s Elastic-certified engineers help industrial and smart-device teams secure, optimize, and validate their data for 24/7 reliability.

1. Introduction: When Real-Time Data Becomes a Competitive Edge

In the Internet of Things (IoT) world, data isn’t just a byproduct; it’s the heartbeat of operations. From smart meters and industrial sensors to connected manufacturing lines, companies depend on real-time visibility to make split-second decisions.

But collecting data is only the beginning.

The real challenge is validating, indexing, and analyzing billions of data points from diverse devices without compromising performance, accuracy, or cost efficiency.

That’s where Elasticsearch consulting for IoT comes in.

At Hyperflex, we help engineering teams, architects, and operations leaders ensure their Elastic deployments deliver reliable insights at scale.

2. Why Elasticsearch Has Become the Heart of IoT Infrastructure

Elasticsearch has become the de facto backbone for IoT data because of its unmatched ability to ingest, search, and visualize massive volumes of telemetry in real time.

For IoT organizations, Elasticsearch provides:

- Scalable ingestion pipelines for millions of events per minute

- Flexible schema for evolving sensor data

- High-speed search and filtering for instant insights

- Integration with Kibana for intuitive, visual monitoring

Yet even the most advanced IoT environments face recurring architectural questions:

- Are our indices optimized for time-series data?

- Can our clusters handle 10x growth without downtime?

- How do we ensure accuracy in dashboards that executives rely on?

That’s where an architectural validation and oversight service becomes essential.
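On the first question, recent Elasticsearch 8.x releases offer time series data streams (TSDS), which store metrics more compactly when fields are declared as dimensions or metrics. A minimal index template sketch for `PUT _index_template/<name>`, assuming a hypothetical `iot-metrics-*` naming scheme, a `device.id` dimension, and two sample sensor metrics:

```python
def iot_tsds_template() -> dict:
    """Index template body that turns matching data streams into a TSDS."""
    return {
        "index_patterns": ["iot-metrics-*"],   # hypothetical naming scheme
        "data_stream": {},
        "priority": 200,                       # above the built-in templates (priority 100)
        "template": {
            "settings": {
                "index.mode": "time_series",
                "index.routing_path": ["device.id"],  # route documents by dimension
            },
            "mappings": {
                "properties": {
                    "@timestamp": {"type": "date"},
                    "device": {"properties": {
                        "id": {"type": "keyword", "time_series_dimension": True},
                    }},
                    "sensor": {"properties": {  # hypothetical metric fields
                        "temperature_c": {"type": "double", "time_series_metric": "gauge"},
                        "packets_total": {"type": "long", "time_series_metric": "counter"},
                    }},
                }
            },
        },
    }


def field(mapping_props: dict, dotted: str) -> dict:
    """Resolve a dotted field name such as 'device.id' in nested properties."""
    node = {"properties": mapping_props}
    for part in dotted.split("."):
        node = node["properties"][part]
    return node


def routing_uses_dimensions(template: dict) -> bool:
    """Every routing_path entry should point at a dimension field."""
    t = template["template"]
    props = t["mappings"]["properties"]
    return all(field(props, p).get("time_series_dimension") for p in t["settings"]["index.routing_path"])
```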

3. The Architect’s Role: Oversight, Validation, and Confidence

In complex IoT ecosystems, architects act as both advisors and gatekeepers.

Their role isn’t limited to deployment; it’s about ensuring that every index, dashboard, and integration aligns with long-term goals.

A typical Elastic architect engagement includes:

- Architecture review: Validating cluster design, sizing, and ILM strategy

- Data flow validation: Ensuring ingestion pipelines are resilient and optimized

- Security and governance: Applying index-level access, auditing, and data retention policies

- Performance validation: Analyzing query latency, shard allocation, and node utilization

- Scalability oversight: Anticipating how the system will perform at 5x or 10x scale

For decision-makers, this isn’t just technical due diligence; it’s business risk management.

A single oversight in shard design or pipeline logic can cost millions in lost visibility or operational downtime.
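Data flow validation can start at ingest. The sketch below builds an ingest pipeline body (`PUT _ingest/pipeline/<name>`) that rejects readings without a device ID or outside a plausible temperature range, then, in the pipeline-level `on_failure` handler, records the reason and reroutes the document to a `failed-` index instead of dropping it. The field names and the -40 to 125 °C range are assumptions, and rerouting via `_index` assumes regular indices rather than data streams.

```python
def validation_pipeline(min_c: float = -40.0, max_c: float = 125.0) -> dict:
    """Ingest pipeline that fails bad sensor readings and quarantines them.

    device.id and sensor.temperature_c are hypothetical field names.
    """
    out_of_range = (
        "ctx.sensor?.temperature_c != null && "
        f"(ctx.sensor.temperature_c < {min_c} || ctx.sensor.temperature_c > {max_c})"
    )
    return {
        "description": "Validate IoT sensor readings before indexing",
        "processors": [
            {"fail": {"if": "ctx.device?.id == null", "message": "missing device.id"}},
            {"fail": {"if": out_of_range, "message": "sensor.temperature_c out of range"}},
        ],
        # Runs when any processor fails: keep the event, but in a quarantine index.
        "on_failure": [
            {"set": {"field": "error.message", "value": "{{ _ingest.on_failure_message }}"}},
            {"set": {"field": "_index", "value": "failed-{{{ _index }}}"}},
        ],
    }
```

Before deploying, `POST _ingest/pipeline/<name>/_simulate` with a few sample documents shows exactly which readings would be quarantined.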

4. Lessons from Industrial IoT Success Stories

Across industries, IoT pioneers are setting benchmarks in real-time analytics.

For instance, companies in smart metering, water management, and industrial automation have used Elastic’s Professional Services to validate and scale their data infrastructure.

The key takeaway?

Validation saves cost and builds trust.

By engaging Elastic experts early, these firms ensured:

- Continuous uptime during data surges

- Unified monitoring across devices

- Proactive issue detection using Elastic Observability

- Long-term architectural stability

Hyperflex brings that same level of rigor without the limitations of a one-time engagement.

5. How Hyperflex Delivers Strategic Elasticsearch Consulting for IoT

At Hyperflex, we specialize in end-to-end Elasticsearch consulting for IoT, combining architecture, validation, and operational support.

Our Elastic-certified engineers act as trusted advisors to your internal teams, reviewing configurations, validating architecture, and optimizing ingestion pipelines for real-world performance.

Our Core IoT Consulting Capabilities

- Architecture Validation & Oversight: Review data design, index patterns, and query logic.

- Performance Optimization: Benchmark latency, improve query speed, and tune cluster resources.

- Scalability Planning: Model your Elastic growth trajectory and design for scale.

- Monitoring & Alerting Setup: Build Kibana dashboards and threshold-based alerts for IoT telemetry.

- Security & Governance: Implement data retention, RBAC, and audit policies that meet compliance standards.

Every engagement is designed for flexibility, whether you need weekend coverage, a one-time 8-hour review, or a 24/7 enterprise-grade plan.

💡 Explore our Elastic Support Plans to learn more about Quick Review, Full Care, and Premium 24/7 coverage options.

6. Extending Elastic’s Professional Services with Hyperflex Expertise

Elastic’s Professional Services have long supported enterprises in designing and scaling data platforms.

However, many organizations need continuous validation, faster onboarding, or cost-flexible advisory models; that’s where Hyperflex steps in.

Hyperflex complements Elastic’s ecosystem by providing:

- Rapid engagement (start within days, not weeks)

- Flexible advisory hours (8h, 40h, or custom retainers)

- Elastic-certified experts available across time zones

- Ongoing architectural validation and optimization beyond deployment

We work alongside Elastic not as competitors but as partners in success to ensure every deployment remains secure, validated, and ready for growth.

7. Final Takeaway: Scale Your Elastic Environment with Confidence

For IoT companies, data is the engine of innovation, and Elasticsearch is the control center that keeps it running.

But like any engine, it needs periodic validation, tuning, and expert oversight.

Whether you’re building a smart city network, managing industrial sensors, or scaling edge analytics, Hyperflex provides the Elasticsearch consulting expertise to ensure your systems perform flawlessly.

Hyperflex helps IoT companies scale Elastic with confidence.

Contact us at marketing@hyperflex.co to learn more about our Elasticsearch Consulting Services and 24/7 Support Plans.

The 5 KPIs Elastic Users Ignore — And What It Costs Them

Most teams watch “cluster green” but miss 5 KPIs that truly define Elastic performance, scalability, and cost-efficiency. Learn how Hyperflex measures what matters.

Introduction: When “Working Fine” Isn’t Enough

Most Elastic users only notice performance issues when something breaks: a search slows down, dashboards freeze, or indexing suddenly halts.

At Hyperflex, we often see this pattern: by the time users realize there’s a problem, the underlying KPI has been red for days.

Elastic provides hundreds of observability metrics across the stack, from Beats to Elasticsearch nodes, but most teams only monitor surface health:

“Cluster green,” “CPU OK,” “disk stable.”

What they miss are five Elastic Observability KPIs that silently determine whether your cluster is efficient, scalable, and cost-effective.

KPI #1 — Ingest Rate vs. Indexing Latency

Why it matters:

Many teams track data ingest rate but fail to correlate it with indexing latency. When ingest spikes, indexing queues fill up, causing document delays, refresh backlogs, and increased heap usage.

Example:

A fintech client boosted Beats input by 30% during an audit. Ingest looked fine, but average indexing time per document (indexing.index_time_in_millis / indexing.index_total) tripled.

Dashboards lagged and storage grew 20% from merge overhead.

What it costs:

- Slower time-to-insight during compliance reviews

- Increased storage from reindexing overhead

- Degraded alert accuracy due to late-arriving data

Monitor this:

- _nodes/stats/indices/indexing → derive indexing latency

- Logstash _node/stats/pipelines → queue depth

- _cat/thread_pool/write?v&h=node_name,name,active,queue,rejected (current versions consolidate the old index and bulk pools into write)

Consulting tip:

Rule of thumb: as ingest rate grows, average per-document indexing latency should stay within roughly 10% of its baseline.

If it rises, scale hot nodes or isolate ingest via dedicated pipelines.
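To make the ingest-versus-latency comparison concrete, the sketch below derives both numbers from two snapshots of _nodes/stats/indices/indexing taken a known interval apart. It assumes the response shape nodes.<id>.indices.indexing.{index_total, index_time_in_millis}; the function names are hypothetical. Because index_time_in_millis accumulates time across indexing threads, the result is average indexing time per document rather than end-to-end latency.

```python
from typing import Any, Dict, Tuple


def _totals(stats: Dict[str, Any]) -> Tuple[int, int]:
    """Sum indexed-document count and indexing time across all nodes."""
    docs = 0
    millis = 0
    for node in stats["nodes"].values():
        indexing = node["indices"]["indexing"]
        docs += indexing["index_total"]
        millis += indexing["index_time_in_millis"]
    return docs, millis


def indexing_kpis(before: Dict[str, Any], after: Dict[str, Any],
                  interval_s: float) -> Dict[str, float]:
    """Ingest rate (docs/s) and average indexing time per document (ms)
    between two node-stats snapshots taken interval_s seconds apart."""
    docs0, ms0 = _totals(before)
    docs1, ms1 = _totals(after)
    delta_docs = docs1 - docs0
    delta_ms = ms1 - ms0
    rate = delta_docs / interval_s if interval_s > 0 else 0.0
    per_doc_ms = delta_ms / delta_docs if delta_docs > 0 else 0.0
    return {"ingest_docs_per_s": rate, "avg_index_ms_per_doc": per_doc_ms}


if __name__ == "__main__":
    def snapshot(total: int, millis: int) -> Dict[str, Any]:
        # Minimal stand-in for a real _nodes/stats response (one node).
        return {"nodes": {"n1": {"indices": {"indexing": {
            "index_total": total, "index_time_in_millis": millis}}}}}

    print(indexing_kpis(snapshot(1_000, 500), snapshot(7_000, 3_500), interval_s=60))
```

Plot both values on the same dashboard: if avg_index_ms_per_doc climbs while ingest_docs_per_s stays flat or rises, the write path is saturating.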

KPI #2 — Shard Balance and Memory Pressure

Why it matters:

Unbalanced shards create hidden performance bottlenecks. If one node holds more shards (especially primaries) than others, it bears a disproportionate share of indexing and query load, driving heap pressure and even node restarts.

Example:

An e-commerce client had 250 indices with daily rollovers. Shards were not spread evenly as ILM rolled indices over, and one node carried twice the shard count of the others. Searches targeting multi-index patterns slowed by 40%, and cache evictions skyrocketed.

What it costs:

- Oversized hardware and inflated cloud spend

- Reduced query performance and uptime

- Frequent manual maintenance

Monitor this:

- _cat/shards and _cluster/allocation/explain

- _nodes/stats/jvm → heap per node

- Fielddata evictions and query cache hit ratios

Consulting tip:

Combine ILM rollovers with regular shard audits.

Keep shard sizes between roughly 20 and 50 GB.

For older indices, use automated rebalancing scripts; Hyperflex often builds these for legacy indices to prevent uneven load.
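A regular shard audit can be scripted against _cat/shards?format=json&bytes=b&h=index,shard,prirep,state,store,node. The sketch below assumes that column set (values arrive as strings, and unassigned shards have a null node). It counts started shards per node, flags nodes holding more than 1.5× the mean (an arbitrary threshold), and lists primaries outside the 20–50 GB band. Small indices legitimately have small shards, so treat that list as a prompt for review rather than an automatic action; shard_report is a hypothetical name.

```python
from collections import Counter
from typing import Any, Dict, List, Optional

GB = 1024 ** 3


def shard_report(rows: List[Dict[str, Optional[str]]], min_gb: float = 20.0,
                 max_gb: float = 50.0, skew: float = 1.5) -> Dict[str, Any]:
    per_node: Counter = Counter()
    out_of_range = []
    for row in rows:
        node = row.get("node")
        # Skip unassigned, initializing, and relocating shards.
        if row.get("state") != "STARTED" or not node:
            continue
        per_node[node] += 1
        store = row.get("store")
        # Size bands apply to primaries; replicas mirror them.
        if row.get("prirep") == "p" and store is not None:
            size_gb = int(store) / GB
            if not min_gb <= size_gb <= max_gb:
                out_of_range.append((row["index"], row["shard"], round(size_gb, 1)))
    mean = sum(per_node.values()) / len(per_node) if per_node else 0.0
    heavy = sorted(n for n, count in per_node.items() if count > skew * mean)
    return {"shards_per_node": dict(per_node), "heavy_nodes": heavy,
            "out_of_range": out_of_range}
```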

KPI #3 — Search Latency (and Why P99 Matters)

Why it matters:

Average latency can be misleading. A cluster may respond to most queries in 300 ms — but 1% of queries might take 5 seconds. Those are the ones users remember.

Example:

A SaaS company used Elastic for log search. Average latency looked fine (400 ms), but P99 queries spiked to 6 seconds on keyword-heavy dashboards. End users lost confidence in “real-time” observability.

What it costs:

- Loss of end-user trust and productivity

- Longer MTTR (Mean Time to Resolution)

- Poor performance on mission-critical dashboards

Monitor this:

- P95 and P99 latency in APM, with per-query breakdowns in Search Profiler

- Search slow logs (index.search.slowlog.threshold.query.warn)

- Query cache utilization trends

Consulting tip:

Always visualize P95–P99 latency next to averages. Use Kibana's Search Profiler to pinpoint expensive fields and query clauses. Hyperflex tuning often reduces query latency by 30–50% in high-volume clusters.
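The gap between average and tail latency is easy to demonstrate. This sketch computes nearest-rank percentiles over a list of query latencies in milliseconds (for example, took values you collect from search responses; the collection step is assumed). APM and other tools may use interpolating percentile methods, so their figures can differ slightly.

```python
import math
from statistics import mean
from typing import Dict, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct%
    of all samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_summary(samples_ms: Sequence[float]) -> Dict[str, float]:
    return {"avg": mean(samples_ms),
            "p95": percentile(samples_ms, 95),
            "p99": percentile(samples_ms, 99)}


if __name__ == "__main__":
    # 98 fast queries and 2 slow ones: the average hides the tail.
    samples = [300] * 98 + [5000] * 2
    print(latency_summary(samples))  # avg 394, p95 300, p99 5000
```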

KPI #4 — Node Health & JVM Memory Trends

Why it matters:

JVM heap usage is the heartbeat of cluster stability. Even when CPU and disk seem fine, growing heap usage can predict crashes during high ingest.

Example:

A security team ignored gradual heap growth during peak Beats ingestion. Garbage collection (GC) pause times rose from 0.3 to 1.5 seconds, leading to multi-minute indexing stalls.

What it costs:

- Missed alerts and false negatives

- Higher downtime risk

- Unnecessary node scaling and cloud cost

Monitor this:

- JVM heap over time (Node Stats API)

- GC count and total collection time

- Heap-to-shard ratio

Consulting tip:

Keep JVM heap utilization at or below 75%.

GC pauses longer than 1 s mean it’s time to increase heap (keeping it under about 30.5 GB so compressed object pointers stay enabled) or add nodes.

Use dedicated coordinating nodes for heavy query loads.
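The heap and GC checks above can be scripted against _nodes/stats/jvm. This sketch assumes the response fields jvm.mem.heap_used_in_bytes, jvm.mem.heap_max_in_bytes, and jvm.gc.collectors.old.{collection_count, collection_time_in_millis}; the thresholds mirror the 75% and 1 s guidance above, and jvm_health is a hypothetical name. GC counters are cumulative since node start, so the average is a lifetime figure; for trends, diff two snapshots taken a few minutes apart.

```python
from typing import Any, Dict


def jvm_health(stats: Dict[str, Any], heap_limit_pct: float = 75.0,
               gc_pause_limit_ms: float = 1000.0) -> Dict[str, Dict[str, Any]]:
    report = {}
    for node_id, node in stats["nodes"].items():
        jvm = node["jvm"]
        heap_pct = 100.0 * jvm["mem"]["heap_used_in_bytes"] / jvm["mem"]["heap_max_in_bytes"]
        old_gen = jvm["gc"]["collectors"]["old"]
        count = old_gen["collection_count"]
        # Average old-generation collection time since the node started.
        avg_old_gc_ms = old_gen["collection_time_in_millis"] / count if count else 0.0
        report[node.get("name", node_id)] = {
            "heap_pct": round(heap_pct, 1),
            "avg_old_gc_ms": round(avg_old_gc_ms, 1),
            "warn": heap_pct > heap_limit_pct or avg_old_gc_ms > gc_pause_limit_ms,
        }
    return report
```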

KPI #5 — Indexing Pressure and Queue Saturation

Why it matters:

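Elasticsearch 7.x+ reports indexing_pressure.memory in node stats, revealing how much heap in-flight indexing requests consume. Once that memory reaches its limit, Elasticsearch rejects new writes with 429 errors, so ignoring it lets backpressure build silently.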

Example:

A healthcare provider saw random 429s from Logstash. Root cause: indexing pressure exceeded 70% of its memory limit during burst writes from multiple Filebeat streams.

What it costs:

- Lost or delayed data ingestion

- SLA violations and compliance risks

- Cluster instability during peak hours

Monitor this:

- Indexing pressure metrics (_nodes/stats/indexing_pressure)

- Thread pool rejections (_cat/thread_pool)

- Disk I/O saturation during bulk writes

Consulting tip:

Tune bulk request sizes (5–10 MB max) and use ingest nodes for high-volume sources. Hyperflex engineers often build custom auto-throttling scripts that cap Beats throughput when memory pressure nears 65%.
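An auto-throttling check like the one described could start from _nodes/stats/indexing_pressure. This sketch assumes the fields indexing_pressure.memory.current.all_in_bytes, indexing_pressure.memory.limit_in_bytes, and the *_rejections counters under indexing_pressure.memory.total; verify them against your version's node stats output. The 65% trigger comes from the tip above, pressure_report is a hypothetical name, and what a caller does with the throttle flag (pausing a shipper, shrinking batches) is left open.

```python
from typing import Any, Dict

REJECTION_KEYS = ("coordinating_rejections", "primary_rejections", "replica_rejections")


def pressure_report(stats: Dict[str, Any], throttle_at: float = 0.65) -> Dict[str, Dict[str, Any]]:
    report = {}
    for node_id, node in stats["nodes"].items():
        memory = node["indexing_pressure"]["memory"]
        limit = memory.get("limit_in_bytes", 0)
        # Share of the indexing-pressure memory limit currently in use.
        ratio = memory["current"]["all_in_bytes"] / limit if limit else 0.0
        # Cumulative rejections since node start (these surface as 429s).
        rejections = sum(memory["total"].get(key, 0) for key in REJECTION_KEYS)
        report[node.get("name", node_id)] = {
            "ratio": round(ratio, 2),
            "rejections": rejections,
            "throttle": ratio >= throttle_at,
        }
    return report
```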

The Business Cost of Ignoring Elastic KPIs

Ignoring these KPIs doesn’t just slow your cluster — it breaks trust in your observability platform.

When dashboards lag or alerts misfire, teams stop relying on Elastic as their “source of truth.”

Hyperflex performance audits show that neglected KPIs can cause:

- 20–30% higher storage and compute costs from poor shard sizing

- Up to 50% longer MTTR when query latency isn’t monitored

- 40% lower indexing efficiency when ingest latency is ignored

Each missed KPI is money left on the table — and time lost during incidents.


“Observability isn’t just about uptime. It’s about economics — every KPI you ignore adds hidden operational costs.”

Final Takeaway: Observability Is a Business Strategy

Elastic Observability is more than dashboards — it’s a business strategy for performance economics.

Each KPI you ignore becomes a recurring expense:

CPU wasted on rebalancing, engineers fighting preventable latency, or inflated cloud bills from overprovisioning.

At Hyperflex, we help organizations translate Elastic metrics into measurable business value. Our consultants correlate ingest, shard, and JVM KPIs to design right-sized architectures that scale predictably and save money.

Elastic + AI: How RAG Transforms Enterprise Search Experiences

Search is evolving—from finding data to understanding meaning. Learn how Hyperflex integrates Elastic + AI + RAG to create intelligent, context-aware enterprise search that drives real-world results.

Introduction: The New Era of Intelligent Search

Search is no longer about finding; it’s about understanding.

Across every enterprise, teams are rethinking how employees, customers, and systems interact with information.

The question has evolved from “Where is this data?” to “What does this data mean?”

That’s where Elastic + AI enter the picture. Together, they redefine how enterprises discover knowledge, automate workflows, and unlock insights hidden in years of operational data.

At the center of it all lies a transformative framework: Retrieval-Augmented Generation (RAG) powered by Elastic’s Search Relevance Engine (ESRE).

At Hyperflex, we’ve seen this transformation firsthand. Our engineers have guided enterprises across finance, retail, and technology to merge Elastic with Generative AI, creating systems that think contextually and answer intelligently.

In one recent retail deployment, Elastic and AI reduced manual search time by 70% and surfaced insights that previously took analysts hours to uncover. In financial compliance, automated knowledge retrieval improved audit readiness by over 60%, cutting time-to-insight from days to minutes.

Why Enterprises Need AI-Powered Search

Data volume is no longer the challenge; context is.

Enterprises manage petabytes of logs, transactions, and documentation. Yet, 80% of that data remains underutilized because traditional search systems can’t interpret meaning.

AI bridges that gap:

- Language understanding helps search engines interpret natural questions.

- Vector embeddings turn unstructured data into searchable context.

- Generative AI produces human-like responses, grounded in real enterprise data.

The result is a new paradigm: Enterprise Search that explains, not just lists.

Elastic’s Advantage in the GenAI Landscape

Elastic has quietly built one of the most flexible AI-ready infrastructures available.

With vector search, semantic relevance ranking, and native integrations with LLMs, it gives enterprises everything they need to build AI-native applications securely and at scale.

Elastic’s Search Relevance Engine (ESRE) takes this even further. It combines:

- Text expansion, so queries find meaning, not just matches.

- Vector similarity search for contextual recall.

- Reranking models that improve response quality over time.

Together, these features turn Elasticsearch from a data indexer into an intelligent reasoning layer capable of powering real enterprise AI applications.
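One common way to merge lexical and vector results is reciprocal rank fusion (RRF), which Elasticsearch offers for hybrid search. The sketch below is a plain-Python illustration of the technique, not ESRE's internal implementation: each document scores 1 / (k + rank) in every result list it appears in, and the scores are summed. k = 60 is a commonly used constant; the document IDs are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(result_lists: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Fuse ranked lists of document IDs; best fused score first."""
    scores: Dict[str, float] = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by ID for a stable order.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


if __name__ == "__main__":
    bm25_hits = ["doc-a", "doc-b", "doc-c"]    # lexical ranking
    vector_hits = ["doc-c", "doc-a", "doc-d"]  # semantic ranking
    print(reciprocal_rank_fusion([bm25_hits, vector_hits]))
```

RRF uses only ranks, not raw scores, which is why it works well when BM25 scores and vector similarities live on different scales.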

Inside the RAG Framework with Elastic

How RAG Works in Enterprise Environments

Retrieval-Augmented Generation (RAG) enhances LLMs by grounding their responses in your data.

Instead of generating text from a static model, RAG retrieves context from Elastic indices — policies, logs, documents, or code — and feeds it to the LLM for accurate, trustworthy answers.

Diagram Placeholder: RAG pipeline on Elastic Stack: Index → Vector Store → Retriever → LLM → Response

In practice:

1. Data Ingestion & Indexing
   - Elastic indexes both structured (tables, configs) and unstructured (PDFs, logs, chat transcripts) content.
   - An embedding pipeline converts textual content into dense vector representations using an embedding model (e.g., text-embedding-3-small, BERT, or Elastic’s native model).
   - Each document now has both keyword and vector representations, stored in text and dense_vector fields respectively.

2. Retrieval Layer (ESRE in Action)
   - When a user asks a question, Elastic performs hybrid retrieval:
     - BM25 (text-based) search identifies lexically similar content.
     - Vector similarity search (via HNSW graph-based ANN) finds semantically similar content even if keywords differ.
   - Elastic’s Search Relevance Engine (ESRE) combines these signals, re-ranks results, and produces a set of highly relevant passages.

3. Augmentation & Context Construction
   - The retrieved documents or snippets are combined into a structured context payload.
   - Elastic’s ESRE API or your middleware layer handles chunking, deduplication, and truncation to fit the LLM’s context window.

4. Generation & Response
   - The context is passed to an LLM, either Elastic Managed LLM (running within Elastic Cloud) or an external model like OpenAI GPT, Anthropic Claude, or Azure OpenAI.
   - The LLM generates a natural language answer, grounded in the Elastic-provided context.
   - Responses can be pushed directly into Kibana dashboards, APIs, or chat assistants.
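The augmentation step can be sketched in a few lines. This example deduplicates retrieved passages (ignoring case and whitespace), truncates them to a budget, and numbers them so the LLM can cite sources. It approximates tokens with whitespace-separated words, which is an assumption; a production system would count with the target model's tokenizer. The function names and prompt wording are hypothetical.

```python
from typing import Iterable, List


def build_context(passages: Iterable[str], max_tokens: int = 1000) -> str:
    """Deduplicate passages, truncate to a word budget, and number them."""
    seen = set()
    kept: List[str] = []
    used = 0
    for passage in passages:
        key = " ".join(passage.lower().split())  # case/whitespace-insensitive
        if not key or key in seen:
            continue
        seen.add(key)
        remaining = max_tokens - used
        if remaining <= 0:
            break
        words = passage.split()[:remaining]  # words stand in for tokens
        kept.append(" ".join(words))
        used += len(words)
    return "\n\n".join(f"[{i}] {text}" for i, text in enumerate(kept, start=1))


def build_prompt(question: str, passages: Iterable[str], max_tokens: int = 1000) -> str:
    context = build_context(passages, max_tokens)
    return ("Answer using only the numbered context below and cite passage numbers.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")
```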

It’s fast, scalable, secure and explainable — four qualities enterprises demand most.

What Makes RAG Different from Traditional Search


RAG doesn’t just find. It reasons.

By embedding enterprise data and enabling context-aware generation, Elastic turns your search layer into a knowledge reasoning engine trusted by security, compliance, and engineering teams alike.

Real-World Results from Hyperflex Webinars

Hyperflex has spent the past year helping enterprises bring these concepts to life.

In our recent webinars — including “Search That Thinks: How RAG and ESRE Elevate GenAI Experiences” — we showcased how Elastic and AI can turn existing infrastructure into cognitive systems.

Our engineers have built real RAG prototypes on the Elastic Stack, integrating OpenAI and Elastic vector search to show how knowledge retrieval can evolve from a query to a conversation.

💡 Hyperflex Insight

Our “Gen AI in Finance powered by Elastic” session revealed how contextual retrieval dramatically improved accuracy in financial compliance searches, reducing false positives by over 60%.

Join our next live session to see Elastic-powered GenAI in action.

hyperflex.co/events

Challenges Enterprises Face (and How Hyperflex Solves Them)

Even with advanced tools, enterprises face three recurring challenges.

1. Data Fragmentation & Latency

Legacy architectures slow retrieval and distort relevance.

Hyperflex helps unify indices and optimize sharding strategies for AI workloads.

2. Cost & Governance of GenAI Systems

Public LLM APIs (e.g., GPT-4, Claude, Gemini) can be expensive and raise governance concerns: unpredictable token costs, data egress, and compliance limitations.

Our engineers deploy hybrid models using Elastic Managed LLM for cost control and privacy.

3. Aligning AI with Existing Workloads

Integrating AI without disrupting observability or security pipelines is critical.

Hyperflex ensures RAG and AI inference stay Elastic-native, not bolted on.

Building the Future with Elastic Managed LLM

Elastic’s next step, Managed LLM, represents a turning point.

It allows enterprises to experiment with AI inside their Elastic environment without complex integrations or model management.

Learn more in Elastic’s announcement of Managed LLM.

Hyperflex believes this is where Elastic and AI truly converge:

- Data remains where it belongs, inside Elastic.

- End-to-end embedding lifecycle management (generation, update, reindex).

- AI insights flow directly into dashboards, alerts, and knowledge assistants.

- Security and compliance stay intact.

- Performance tuning for hybrid queries across structured + vector data.

This isn’t the future of search — it’s the beginning of AI-native enterprise ecosystems built on Elastic.

Our Consulting Approach: Bridging AI and Elastic

At Hyperflex, we don’t just deploy AI features; we align them with real enterprise goals.

Our consulting teams pair Elastic-certified engineers with AI specialists to accelerate adoption while keeping architectures secure, cost-efficient, and future-proof.

We guide clients through the full lifecycle — from RAG architecture design and embedding pipelines to observability tuning and Elastic-native governance — ensuring every solution delivers measurable value.

Final Takeaway: The Human Edge in AI-Driven Search

AI can retrieve and reason, but humans still define relevance.

The most successful enterprises will be those that blend Elastic’s data power with human curiosity, guided by teams who understand both technology and meaning.

At Hyperflex, that’s our mission: to bridge data and intelligence, one search at a time. We don’t just integrate Elastic and AI — we help enterprises reimagine what search can become.

How to Fix Elasticsearch ILM Rollover Alias Errors [Step-by-Step Guide]

Resolve Elasticsearch ILM alias errors with Hyperflex’s expert guide to stable rollovers and upgrades.

Elasticsearch’s ILM (Index Lifecycle Management) is essential for automating time-series data, but misconfigured aliases can trigger frustrating errors like “index.lifecycle.rollover_alias does not point to index”. For teams on Elasticsearch 6.8 (EOL), resolving this quickly is critical to avoid security risks and upgrade blockers.

This guide combines basic fixes and advanced engineering steps to solve ILM errors permanently.

Step 1: Identify the Active Write Index

Run this API call to find which index is the current write target:

GET _cat/aliases/your_alias?v&h=alias,index,is_write_index

Only the index with is_write_index: true is valid for rollovers.
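If you check many aliases, the same rule can be scripted. This sketch assumes you request JSON (GET _cat/aliases/your_alias?format=json&h=alias,index,is_write_index), where is_write_index comes back as the string "true", "false", or "-" when unset; write_index is a hypothetical helper name.

```python
from typing import Dict, List


def write_index(rows: List[Dict[str, str]]) -> str:
    """Return the single write index behind an alias, or raise if ambiguous."""
    writers = [r["index"] for r in rows if str(r.get("is_write_index")).lower() == "true"]
    if len(writers) == 1:
        return writers[0]
    if not writers and len(rows) == 1:
        # An alias on exactly one index implicitly writes to that index.
        return rows[0]["index"]
    raise ValueError(f"expected one write index, found {len(writers)}: {writers}")
```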

Real-World Example:

A Hyperflex client’s alias 77d281fd-...interactions pointed to 9 indices, but only one had is_write_index: true.

Step 2: Clean Up Conflicting Aliases

Update the alias to remove invalid indices and lock the correct write index:

POST /_aliases
{
  "actions": [
    { "remove": { "index": "old_index_1", "alias": "your_alias" } },
    { "add": { "index": "correct_index", "alias": "your_alias", "is_write_index": true } }
  ]
}

Step 3: Retry the ILM Policy

Force ILM to reprocess the index:

POST /correct_index/_ilm/retry

Step 4: Check for Legacy Index Templates (Engineer’s Step)

Outdated index templates may auto-generate indices with broken aliases. Audit legacy templates (the only kind available on 6.8) and composable templates (7.8+) with:

GET _template/*your_template*

GET _index_template/*your_template*

Delete or update templates causing conflicts.

Pro Tip: Let Hyperflex audit your templates during upgrades.

Step 5: Verify ILM Policy Conditions

Ensure your policy’s rollover thresholds (e.g., max_size: 50gb) align with actual index sizes:

GET _ilm/policy/your_policy

Adjust criteria if indices never trigger rollovers.
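To see whether a policy's thresholds can ever fire, compare them with the index's real numbers. This sketch assumes you take the rollover block from GET _ilm/policy/your_policy (phases.hot.actions.rollover) and gather the index's age, total primary size, largest primary shard, and document count from other APIs such as _stats. It supports only the max_age, max_size, max_primary_shard_size, and max_docs conditions and a subset of unit suffixes. A rollover fires when any one max_* condition is met, which the function mirrors; all names are hypothetical.

```python
import re
from typing import Any, Dict, List

_SIZE_UNITS = {"b": 1, "kb": 1024, "mb": 1024 ** 2, "gb": 1024 ** 3, "tb": 1024 ** 4}
_TIME_UNITS = {"s": 1, "m": 60, "h": 3600, "d": 86400}


def parse_size(text: str) -> int:
    match = re.fullmatch(r"(\d+(?:\.\d+)?)(b|kb|mb|gb|tb)", text.strip().lower())
    if not match:
        raise ValueError(f"unsupported size: {text!r}")
    return int(float(match.group(1)) * _SIZE_UNITS[match.group(2)])


def parse_age(text: str) -> int:
    match = re.fullmatch(r"(\d+)(s|m|h|d)", text.strip().lower())
    if not match:
        raise ValueError(f"unsupported age: {text!r}")
    return int(match.group(1)) * _TIME_UNITS[match.group(2)]


def rollover_triggers(conditions: Dict[str, Any], age_s: int, primary_bytes: int,
                      largest_primary_shard_bytes: int, docs: int) -> List[str]:
    """Names of the conditions the index already meets (empty = no rollover)."""
    met = []
    if "max_age" in conditions and age_s >= parse_age(conditions["max_age"]):
        met.append("max_age")
    if "max_size" in conditions and primary_bytes >= parse_size(conditions["max_size"]):
        met.append("max_size")
    if ("max_primary_shard_size" in conditions
            and largest_primary_shard_bytes >= parse_size(conditions["max_primary_shard_size"])):
        met.append("max_primary_shard_size")
    if "max_docs" in conditions and docs >= int(conditions["max_docs"]):
        met.append("max_docs")
    return met
```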

Pro Tip: Optimize ILM policies for cost efficiency.

Step 6: Force a Manual Rollover (Engineer’s Step)

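If ILM remains stuck, manually trigger a rollover. Sending the request without a body forces the rollover unconditionally:

POST /your_alias/_rollover

Adding conditions makes it conditional; for example, this request rolls over only if the current write index is at least 30 days old:

POST /your_alias/_rollover
{
  "conditions": { "max_age": "30d" }
}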

This creates a new index (e.g., ...-000015) and shifts the alias.

Pro Tip: Let us manage ILM for hands-free automation.

Step 7: Confirm the Fix

Validate with the ILM Explain API:

GET */_ilm/explain
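When ILM Explain covers many indices, filtering its JSON helps. This sketch assumes the response shape indices.<name>.{managed, step, failed_step, step_info.reason}; indices sitting in the ERROR step are the ones to fix before running POST /<index>/_ilm/retry. The helper name is hypothetical.

```python
from typing import Any, Dict, List, Tuple


def stuck_indices(explain: Dict[str, Any]) -> List[Tuple[str, str, str]]:
    """(index, failed_step, reason) for every ILM-managed index in the ERROR step."""
    problems = []
    for name, info in sorted(explain.get("indices", {}).items()):
        if not info.get("managed") or info.get("step") != "ERROR":
            continue
        reason = info.get("step_info", {}).get("reason", "")
        problems.append((name, info.get("failed_step", ""), reason))
    return problems
```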

FAQ: Elasticsearch ILM & Aliases

Q: How do I check alias health?

A: Use GET _cat/aliases/your_alias—only one index should have is_write_index: true.

Q: Can I fix ILM without downtime?

A: Yes! Follow Steps 1-7 for zero disruption.

Need Expert Help? Hyperfix Your Elasticsearch Cluster

Stuck with ILM errors or EOL upgrades? Hyperflex delivers:

✅ Zero-Downtime Upgrades to Elasticsearch 7.x/8.x.

✅ ILM Policy Audits to prevent rollover failures.

✅ 24/7 Managed Elasticsearch for mission-critical clusters.

12 Performance Tuning Tips for Elasticsearch

Optimize Elasticsearch with Hyperflex’s proven tips for faster queries, better indexing, and scalability in any industry.

Introduction

Whether you're handling logs, search, metrics, or security data, Elasticsearch is powerful—but only when tuned right. At Hyperflex, we work across industries helping engineers build fast, stable, and scalable Elastic clusters. This guide distills our most effective, field-tested performance tips. Each one is backed by real-world consulting experience across banking, healthcare, insurance, and retail.

Use these tips to:

- Speed up queries

- Reduce heap pressure

- Improve indexing throughput

- Avoid scaling bottlenecks

1. Size Shards Based on Data

Don't just accept the default of 1 primary shard. Right-sizing your shards can drastically improve performance.

Hyperflex Insight: We helped a healthcare provider reduce query latency by 65% just by moving from 6 shards to 2 for 30GB indices. The result: faster access to patient records during emergency care.

Guideline:

- Keep shards between 10–50 GB

- Use the _shrink or _reindex API for cleanup
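The sizing guideline reduces to simple arithmetic: divide the expected primary data size by a target shard size and round up. The target is an assumption you choose within the 10–50 GB band, and primary_shard_count is a hypothetical name; plan with the size the index will reach before rollover, not today's size.

```python
import math


def primary_shard_count(index_size_gb: float, target_shard_gb: float = 40.0) -> int:
    """Smallest number of primaries that keeps each shard at or under the target."""
    if target_shard_gb <= 0:
        raise ValueError("target_shard_gb must be positive")
    return max(1, math.ceil(index_size_gb / target_shard_gb))


if __name__ == "__main__":
    print(primary_shard_count(30))      # 1
    print(primary_shard_count(30, 15))  # 2
    print(primary_shard_count(130))     # 4
```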

2. Reduce Segment Count with Force Merge

Too many segments? Elasticsearch spends more time merging than serving queries.

Retail Example: A clothing e-commerce site saw a 40% drop in CPU load on its search nodes by running _forcemerge after major inventory uploads.

Use:

POST /my-index/_forcemerge?max_num_segments=1

Run it only after bulk ingestion or reindexing has finished, and only on indices that no longer receive writes.

3. Increase Refresh Interval for Heavy Write Loads

The default refresh_interval is 1s. That’s too frequent for bulk indexing.

Insurance Example: One insurance company increased ingestion speed by 3x during policy batch imports by temporarily setting refresh_interval to 60s.

PUT /my-index/_settings
{
  "refresh_interval": "60s"
}

Reset it after the job completes (for example, back to "1s", or to null to restore the default).

4. Use Doc Values and Disable Norms When Possible

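- Doc values = faster sorting and aggregations (enabled by default on most non-text fields)

- Norms = needed only for scoring; disable them on text fields you filter but never score (keyword fields already omit norms by default)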

Banking Example: A major bank disabled norms on all transaction fields and improved dashboard response time by 30%.

"title": {
  "type": "text",
  "norms": false
}

5. Filter First, Score Later

Use filter context for clauses that don’t affect scoring (e.g., date, status).

Retail Use Case: In a large product catalog, filtering by category and availability before matching improved search responsiveness during flash sales.

6. Cache Smartly with Filter Context

Elasticsearch caches filter results. Use filter for repeat queries.

Healthcare Example: A diagnostics platform reduced recurring query times for lab results by caching test type and patient group filters.

Don’t:

"must": [
  { "term": { "status": "error" } }
]

Do:

"filter": [
  { "term": { "status": "error" } }
]
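If you build queries in application code, the same rule can be applied programmatically. The sketch assumes one text field that should drive relevance and keyword-type fields used for exact matching (the field names in the example are hypothetical). Only the match clause affects scoring; the term clauses run in filter context, where Elasticsearch can cache them.

```python
import json
from typing import Any, Dict, List


def build_bool_query(text: str, text_field: str, filters: Dict[str, Any]) -> Dict[str, Any]:
    """Scored full-text clause in `must`; exact matches in `filter` (unscored)."""
    filter_clauses: List[Dict[str, Any]] = [
        {"term": {field: value}} for field, value in sorted(filters.items())
    ]
    return {"query": {"bool": {
        "must": [{"match": {text_field: text}}],
        "filter": filter_clauses,
    }}}


if __name__ == "__main__":
    body = build_bool_query("red dress", "title", {"category": "apparel", "availability": "in_stock"})
    print(json.dumps(body, indent=2))
```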

7. Use Bulk API for High Write Throughput

Indexing one document at a time is inefficient.

Insurance Example: Processing claims required indexing 5 million documents daily. Switching to bulk reduced ingestion time from 6 hours to 45 minutes.

POST /_bulk
{ "index": { "_index": "logs" } }
{ "message": "hello" }
{ "index": { "_index": "logs" } }
{ "message": "world" }

Batch 500-1000 docs per request.
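A minimal sketch of the batching advice: turn an iterable of documents into newline-delimited _bulk bodies of at most batch_size documents each. Sending the bodies (with any HTTP client, using Content-Type: application/x-ndjson) is left out; the function name and the default of 500 are assumptions taken from the range above.

```python
import json
from typing import Any, Dict, Iterable, Iterator, List


def bulk_bodies(docs: Iterable[Dict[str, Any]], index: str,
                batch_size: int = 500) -> Iterator[str]:
    """Yield NDJSON _bulk request bodies holding at most batch_size documents."""
    lines: List[str] = []
    count = 0
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))  # action line
        lines.append(json.dumps(doc))                             # source line
        count += 1
        if count == batch_size:
            yield "\n".join(lines) + "\n"  # _bulk bodies must end with a newline
            lines, count = [], 0
    if lines:
        yield "\n".join(lines) + "\n"
```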

8. Monitor Heap and GC Overhead

Use the _nodes/stats/jvm API to track memory pressure.

Guideline:

- Keep heap usage below 50%

- Keep the heap under about 30.5 GB so the JVM can use compressed object pointers

Retail Example: A marketplace scaled out ingest nodes after noticing excessive GC during peak sale days.

9. Avoid Wildcards and Leading Regex

These kill performance:

{ "wildcard": { "name": "*john*" } }

Use edge_ngram analyzers, prefix queries, or search_as_you_type fields instead.

Banking Example: Replacing wildcard queries with search_as_you_type reduced autocomplete lag on customer portals.

10. Profile and Benchmark Everything

Use:

GET /my-index/_search
{
  "profile": true,
  "query": { "match": { "message": "error" } }
}

Identify slow steps in your query pipeline.

Also use Rally (https://esrally.readthedocs.io) to simulate performance scenarios.

Hyperflex Tip: We built custom Rally tracks for a retail analytics client to simulate 10K QPS and test reindexing strategies before launching in production.

11. Tune JVM Heap Memory

Elasticsearch relies heavily on JVM memory tuning. Set the heap to no more than ~50% of system RAM, and keep it under about 30.5 GB so compressed object pointers stay enabled.

ES_JAVA_OPTS="-Xms16g -Xmx16g"

Tip: Avoid swapping; set bootstrap.memory_lock: true (the modern replacement for the old mlockall setting) to lock the heap in RAM. Another option on Linux systems is to set the sysctl value vm.swappiness to 1. This reduces the kernel’s tendency to swap and should not lead to swapping under normal circumstances, while still allowing the whole system to swap in emergency conditions.

Monitor with:

GET _nodes/stats/jvm

Hyperflex Example: An insurance company reduced GC pauses by optimizing heap settings on ingestion-heavy nodes, improving SLA compliance.

12. Use Index Templates and ILM

Ensure consistent index management with templates and ILM policies.

Template Setup:

PUT _index_template/logs_template

ILM Example:

PUT _ilm/policy/hot-warm-delete-policy

Retail Use Case: ILM automation helped a fashion retailer manage log retention during peak sales, reducing manual ops overhead.
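The two requests above are shown without bodies. As one possible shape, the sketch below builds them as Python dicts you could send as JSON. All values (the logs-* pattern, the logs rollover alias, ages, and sizes) are assumptions to adapt; max_primary_shard_size needs a recent release (older versions only offer max_size), and with data streams you would drop rollover_alias and add a data_stream object to the template instead.

```python
import json
from typing import Any, Dict


def ilm_policy(max_primary_shard_size: str = "50gb", max_age: str = "7d",
               warm_after: str = "7d", delete_after: str = "30d") -> Dict[str, Any]:
    """Body for PUT _ilm/policy/hot-warm-delete-policy."""
    return {"policy": {"phases": {
        "hot": {"actions": {"rollover": {
            "max_primary_shard_size": max_primary_shard_size,
            "max_age": max_age,
        }}},
        # min_age in later phases is measured from rollover.
        "warm": {"min_age": warm_after, "actions": {"forcemerge": {"max_num_segments": 1}}},
        "delete": {"min_age": delete_after, "actions": {"delete": {}}},
    }}}


def index_template(pattern: str = "logs-*", policy: str = "hot-warm-delete-policy",
                   rollover_alias: str = "logs") -> Dict[str, Any]:
    """Body for PUT _index_template/logs_template (alias-based rollover)."""
    return {
        "index_patterns": [pattern],
        "template": {"settings": {
            "index.lifecycle.name": policy,
            "index.lifecycle.rollover_alias": rollover_alias,
        }},
    }


if __name__ == "__main__":
    print(json.dumps(ilm_policy(), indent=2))
    print(json.dumps(index_template(), indent=2))
```

With alias-based rollover you also create the first index (for example logs-000001) with the alias marked is_write_index: true.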

Final Takeaway

Tuning Elasticsearch isn't about flipping magic switches. It’s about understanding trade-offs, testing changes, and optimizing for your use case.

Hyperflex helps engineers across banking, healthcare, insurance, and retail optimize and scale Elasticsearch clusters with confidence.

Need help? We offer Elasticsearch Consulting Services tailored to your performance goals. Contact us at marketing@hyperflex.co.

Troubleshooting Hot Node Disk Issues in Elastic: When Usage Doesn’t Add Up

Learn to diagnose and prevent Elasticsearch hot node disk spikes with Hyperflex’s expert tips on ILM and cluster health.

Introduction

When managing large Elasticsearch clusters, sudden disk usage spikes on individual hot nodes can pose serious risks—from degraded performance to total write-block scenarios. The challenge? These issues often remain invisible in the cluster health API, yet threaten cluster stability.

This blog walks you through a representative real-world Elastic issue involving unexpected hot node disk growth—uncovering misaligned ILM behavior, lingering index data, and practical mitigation strategies.

The Hot Node Disk Dilemma

Elastic's architecture relies on tiered storage—hot, warm, cold and frozen—designed to optimize performance and cost. But what happens when a hot node shows 90%+ disk usage, while peers hover at 35%?

Even more concerning: the number of shards looks balanced, ILM seems correctly applied, and the cluster is green. So, what’s wrong?

Common Root Causes Behind Unexplained Disk Spikes

Unexpected hot node disk usage is usually a symptom of deeper architectural or operational issues:

🟠 Stale Index Data: Residual files not deleted after shard migration

🟠 ILM Rollover Delays: Indices exceeding max_primary_shard_size before rollovers occur

🟠 Searchable Snapshot Residues: Snapshots incorrectly left in the hot tier

🟠 Shard Lock or Merge Conflicts: Blocking cleanup tasks

🟠 Manual Overrides or API Misuse: Human errors causing stuck data

Case Study Breakdown: Diagnosis Without Red Flags

In this scenario:

- The cluster is green

- No shards are unassigned

- ILM policies are applied

- Yet, one node is using 3x more disk space than its peers

A deeper look reveals:

🔍 Residual Data Still on Disk

du/df output shows hundreds of gigabytes in index folders that no longer have assigned shards.

$ du -sh /var/lib/elasticsearch/nodes/0/indices/*

104G ./irjtyvmUT7mx2gaa-jnvfQ ← index already moved to warm

81G ./GiGP1vSTTZup39l1fo5cVg ← snapshot or orphaned

These indices had successfully rolled over, but their physical data wasn’t cleared from the hot node disk.

Technical Walkthrough: How to Investigate

Here’s how to get to the root of such issues:

1. Check Disk Usage Consistency

GET _cat/allocation?v&h=shards,disk.indices,disk.used,node

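Compare disk.indices vs disk.used. disk.used also counts the OS and any other files on the same volume, so some gap is normal; a large or growing discrepancy suggests leftover index data.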
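Across many nodes, this comparison is easier to script. The sketch assumes GET _cat/allocation?format=json&bytes=b&h=node,shards,disk.indices,disk.used, where values arrive as strings and the UNASSIGNED row carries null disk fields. The 50 GB threshold is an arbitrary starting point, and allocation_gaps is a hypothetical name.

```python
from typing import Dict, List, Optional, Tuple

GB = 1024 ** 3


def allocation_gaps(rows: List[Dict[str, Optional[str]]],
                    min_gap_gb: float = 50.0) -> List[Tuple[str, float]]:
    """Nodes where disk.used exceeds disk.indices by at least min_gap_gb."""
    flagged = []
    for row in rows:
        used, indices = row.get("disk.used"), row.get("disk.indices")
        if used is None or indices is None:
            continue  # e.g. the UNASSIGNED row
        gap_gb = (int(used) - int(indices)) / GB
        if gap_gb >= min_gap_gb:
            flagged.append((row["node"], round(gap_gb, 1)))
    # Largest gap first.
    return sorted(flagged, key=lambda item: -item[1])
```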

2. Identify Orphaned Index Folders

du -sh /var/lib/elasticsearch/nodes/0/indices/* | sort -h
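Folder names under the indices directory are index UUIDs, so they can be matched against what the cluster says should live on that node. This sketch assumes you build the expected set from GET _cat/indices?format=json&h=index,uuid and GET _cat/shards?format=json&h=index,node; the data path is the one used above, and newer versions may lay out the data directory differently. Re-check after relocations finish, treat the output as a list to investigate, and avoid deleting folders by hand. The helper names are hypothetical.

```python
import os
from typing import Dict, Iterable, List, Optional, Set


def expected_uuids(index_rows: Iterable[Dict[str, str]],
                   shard_rows: Iterable[Dict[str, Optional[str]]], node_name: str) -> Set[str]:
    """UUIDs of indices that have at least one shard assigned to node_name."""
    uuid_by_index = {row["index"]: row["uuid"] for row in index_rows}
    return {uuid_by_index[row["index"]] for row in shard_rows
            if row.get("node") == node_name and row["index"] in uuid_by_index}


def orphan_folders(indices_dir: str, expected: Set[str]) -> List[str]:
    """Index folders on disk whose UUID the cluster does not place on this node."""
    with os.scandir(indices_dir) as entries:
        return sorted(e.name for e in entries if e.is_dir() and e.name not in expected)
```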

3. Use ILM Explain

GET <index-name>/_ilm/explain

Check if the index is truly in a later phase (warm/frozen) and why it hasn’t rolled over.

4. Review ILM Policies

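Ensure max_primary_shard_size is enforced properly. Note that ILM only checks rollover conditions when it polls (indices.lifecycle.poll_interval, every 10 minutes by default), so an index can overshoot its threshold between checks.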

5. Cluster Routing Workarounds

To temporarily move shards off the affected node:

PUT _cluster/settings
{
  "persistent": {
    "cluster.routing.allocation.exclude._name": "problem-node-name"
  }
}

Remove the exclusion (set it to null) once disk usage recovers. Or use disk-based allocation thresholds:

PUT _cluster/settings
{
  "persistent": {
    "cluster.routing.allocation.disk.watermark.low": "75%",
    "cluster.routing.allocation.disk.watermark.high": "85%",
    "cluster.routing.allocation.disk.watermark.flood_stage": "95%"
  }
}

Preventative Tactics and Configurations

To prevent such disk anomalies:

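✅ Set the disk watermark max_headroom values (e.g., cluster.routing.allocation.disk.watermark.high.max_headroom) to maintain breathing room on large disks

✅ Reduce the ILM polling interval (indices.lifecycle.poll_interval) if ingesting heavy data (e.g., from security tools)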

✅ Audit du/df regularly on hot nodes

✅ Monitor shard size vs policy limits

✅ Avoid manual index movements that bypass ILM

How Hyperflex Helps: Elastic Expertise on Demand

At Hyperflex, we’ve helped dozens of Elastic users and teams diagnose and resolve cluster-level issues like this.

We specialize in:

- 🔧 ILM audits & policy enforcement

- 🔄 Hot/warm/cold/frozen tier optimization

- 🚑 Emergency troubleshooting

- 📈 Shard sizing strategies

- 🛡️ Ongoing Elasticsearch Consulting Services

Need expert help diagnosing your cluster? We’re just a call away.

Final Takeaway

Disk usage issues on hot nodes often require deeper analysis than surface-level cluster health stats. They can be caused by leftover index folders, delayed rollovers, or operational blind spots.

With the right tools and experience, these problems are fixable—and preventable.

Hyperflex helps teams scale Elastic fast—with confidence.

Contact us to explore how we can support your Elastic journey.

Is Elasticsearch a Database? What It Is, What It Isn’t, and How to Use It Properly

Explore if Elasticsearch is a database and how Hyperflex optimizes it for search, analytics, and security in this expert guide.

Introduction

"Is Elasticsearch a database?" It's a question we hear from engineers, architects, and even CIOs regularly—especially when they’re exploring Elastic for logging, security, or real-time analytics.

The short answer? It depends on how you're planning to use it.

At Hyperflex, we offer specialized Elasticsearch Consulting Services to help organizations deploy Elastic effectively and avoid common pitfalls—especially when Elastic is used like a traditional database without the guardrails of proper planning.

What Is Elasticsearch, Technically?

Elasticsearch is an open-source, distributed search and analytics engine. It’s built on top of Apache Lucene and is best known for its ability to:

- Perform full-text search at massive scale

- Index semi-structured JSON documents

- Provide near real-time analytics on diverse data sources

It is a core part of the Elastic Stack, which includes Logstash, Kibana, and Beats. Together, they support logging, observability, SIEM, and search applications.

Is Elasticsearch a Database?

Technically yes—but not in the traditional sense.

Elasticsearch is a NoSQL datastore optimized for search and analytics, not transactional integrity or relational joins.

✅ Why You Could Call Elasticsearch a Database:

- It stores and indexes structured and unstructured data

- It allows CRUD operations via REST APIs

- It supports queries, filters, and aggregations like a query engine

🚫 Why It’s Not a Traditional Database:

- No built-in ACID guarantees for multi-document transactions

- Supports only limited join-like relationships (nested and parent-child join fields), not SQL-style joins

- Not ideal for OLTP (online transaction processing)

- Doesn’t manage relational schemas

Key Takeaway: Elasticsearch is a search-optimized document store, not a general-purpose transactional RDBMS.

When You Should Use Elasticsearch

Elasticsearch excels in use cases that involve high-speed querying, filtering, and aggregating large volumes of data:

🔍 Full-Text Search

Ideal for applications like:

- E-commerce product catalogs

- Job search engines

- Knowledge bases

📊 Observability

Log and trace ingestion at scale with Elastic Observability:

- Real-time infrastructure monitoring

- APM (Application Performance Monitoring)

- Custom dashboards with Kibana

🛡️ Security & SIEM

Elastic Security offers:

- Scalable log ingestion from endpoints, firewalls, and apps

- Real-time correlation and alerting

- Threat hunting and anomaly detection

These are all examples where using Elasticsearch as a search engine with database features delivers incredible value.

When You Shouldn't Use Elasticsearch

Many teams misuse Elastic as a primary system-of-record database. Here’s when you should avoid it:

- You need strict transactional integrity (ACID): Choose PostgreSQL or MySQL.

- Heavy relational joins are required: Use a relational database.

- Real-time writes + frequent updates: Document updates in Elastic are expensive; each update reindexes the whole document as a new version.

- Cost sensitivity: Elasticsearch’s resource needs can become expensive without careful index management and lifecycle policies.

Elastic vs Traditional Databases

Best Practices for Elastic Data Storage

If you’re going to use Elastic as your primary data store, make sure to:

✅ Use Index Lifecycle Management (ILM) to control data growth

✅ Avoid frequent document updates—prefer immutability

✅ Right-size your shards to avoid over-sharding (5–50 GB per shard is ideal)

✅ Set up data tiers (hot-warm-cold) for storage optimization

✅ Use Snapshot and Restore for backup strategies

✅ Always monitor resource consumption with Kibana dashboards

Need help doing that? Our team at Hyperflex helps clients set this up the right way through our Elasticsearch Consulting Services.

Conclusion: Use the Right Tool the Right Way

Elasticsearch is a powerful tool—but it’s not a silver bullet. Treating it like a traditional relational database can lead to performance bottlenecks, data loss, and cost overruns.

But when Elastic is used correctly—for real-time analytics, security, and scalable search—there’s nothing quite like it.

Hyperflex helps teams scale Elastic fast—with confidence. Contact us to explore how we can support your Elastic journey.

Elastic Solutions for Healthcare: Enhancing Data Search, Security, and Real-Time Insights

Learn how Hyperflex leverages Elastic Stack to enhance healthcare data management, patient monitoring, and compliance with real-time search and analytics.

Introduction

The healthcare industry is generating vast amounts of data, from electronic health records to real-time patient monitoring and medical research. Managing, searching, and securing this data efficiently while maintaining compliance is essential for hospitals, research institutions, and healthcare providers.

At Hyperflex, we help healthcare organizations leverage Elastic Stack to improve data accessibility, security, and operational efficiency. This article explores how Elastic solutions optimize healthcare workflows, enhance patient care, and strengthen compliance measures.

Why Healthcare Organizations Choose Elastic Stack

Healthcare institutions require reliable data processing to manage increasing complexity. Elastic Stack provides real-time search, analytics, and monitoring capabilities, making it an ideal choice for:

✔ Instant access to patient data and medical histories

✔ Support for regulatory compliance through built-in security features such as access control, encryption, and audit logging

✔ Real-time monitoring of patient vitals and early detection of anomalies

✔ Scalable infrastructure to support growing data needs

✔ Improved processing of medical research and clinical trials

Organizations like UCLA Health have adopted Elastic solutions to enhance searchability within patient records and optimize clinical workflows.

Key Use Cases of Elastic Stack in Healthcare

Optimizing Electronic Health Records Search & Patient Data Management

The Challenge

Hospitals and clinics often struggle with fragmented systems, making it difficult for medical professionals to quickly retrieve patient records. Delays in accessing critical information can impact patient outcomes and diagnostics.

Elastic Solution

- Indexing of millions of records to enable instant search

- Dashboards that provide real-time visualization of patient histories

- Data ingestion and processing from multiple healthcare applications
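Loading millions of records is usually done through the `_bulk` API rather than one request per document. The sketch below formats records as the newline-delimited JSON body that `_bulk` expects; the helper `to_bulk_ndjson`, the index name `patient-records`, and the record fields are hypothetical.

```python
import json


def to_bulk_ndjson(index: str, records: list[dict], id_field: str = "record_id") -> str:
    """Build a _bulk request body: one action line plus one source line per record."""
    lines = []
    for record in records:
        # Action line: an explicit _id means re-running the load overwrites instead of duplicating.
        lines.append(json.dumps({"index": {"_index": index, "_id": record[id_field]}}))
        # Source line: the document itself.
        lines.append(json.dumps(record))
    # The bulk API requires the body to end with a newline.
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    sample = [
        {"record_id": "r-001", "patient_id": "p-17", "type": "lab_result", "text": "HbA1c 6.1%"},
        {"record_id": "r-002", "patient_id": "p-17", "type": "clinical_note", "text": "Follow-up in 3 months"},
    ]
    print(to_bulk_ndjson("patient-records", sample), end="")
```

The resulting body would be sent as `POST /_bulk` with the `application/x-ndjson` content type; for very large loads, splitting records into batches keeps each request a manageable size.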

Industry Example: UCLA Health

UCLA Health developed a custom tool using Elasticsearch, AngularJS, HTML5, and Microsoft .NET to index pathology cases, clinical notes, lab results, and patient histories. This system allows clinicians to retrieve patient records within seconds, improving diagnosis and treatment accuracy.

Real-Time Patient Monitoring & Predictive Analytics

The Challenge

Hospitals need real-time monitoring to detect early signs of critical conditions. Traditional manual monitoring increases response times and can lead to life-threatening delays.

Elastic Solution

- Collection of patient vitals in real-time from monitoring devices

- Anomaly detection to flag potential health risks

- Dashboards that display live patient data for medical teams
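Elastic's machine learning anomaly detection builds its own statistical models, so the following is only a simplified stand-in that shows the underlying idea: flag a vital-sign reading that deviates sharply from the readings just before it, using a rolling z-score. The `flag_anomalies` helper, window size, threshold, and sample data are illustrative assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(readings: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices of readings that deviate from the previous `window` readings.

    A reading is flagged when it is more than `threshold` standard deviations
    from the mean of the readings immediately before it (a rolling z-score).
    """
    if window < 2:
        raise ValueError("window must be at least 2 to compute a standard deviation")
    flagged = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mu = mean(history)
        sd = stdev(history)
        if sd == 0:
            # Flat history: any change at all counts as a deviation.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sd > threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    heart_rate = [72, 74, 73, 75, 74, 73, 140, 74]  # beats per minute, synthetic sample
    print(flag_anomalies(heart_rate))  # prints [6]
```

In an Elastic deployment, the equivalent would be an anomaly detection job over the vitals index, which also learns seasonality and trends that a fixed rolling window cannot capture.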

Use Case: Early Sepsis Detection

Hospitals using Elasticsearch for anomaly detection have successfully identified early sepsis indicators, allowing doctors to intervene sooner and improve patient outcomes.

Healthcare Security, Compliance & Log Monitoring

The Challenge

Protecting sensitive patient data is critical, as healthcare organizations must comply with regulations while defending against cybersecurity threats.

Elastic Solution

- Role-based access controls and encryption

- Threat detection and security monitoring

- Logging and tracking for compliance auditing
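Role-based access control in Elasticsearch is defined through roles. The sketch below builds a body for the `PUT _security/role/<role-name>` API that combines read-only index access, field-level security (`field_security.grant`), and document-level security (`query`). The helper `build_clinician_role`, the `patient-records-*` index pattern, and the field names are hypothetical, and field- and document-level security may require a paid subscription tier. Encryption (TLS) and audit logging (`xpack.security.audit.enabled`) are configured separately in the node settings.

```python
import json


def build_clinician_role(department: str, readable_fields: list[str]) -> dict:
    """Role body for PUT _security/role/<name> (hypothetical helper and index pattern).

    - privileges: read-only access to the patient-record indices
    - field_security.grant: only these fields are visible to the role
    - query: only documents from this department are visible
    """
    return {
        "cluster": [],
        "indices": [
            {
                "names": ["patient-records-*"],
                "privileges": ["read"],
                "field_security": {"grant": readable_fields},
                # Assumes `department` is mapped as a keyword field so the term query matches exactly.
                "query": {"term": {"department": department}},
            }
        ],
    }


if __name__ == "__main__":
    role = build_clinician_role("cardiology", ["patient_id", "diagnosis", "lab_results"])
    print(json.dumps(role, indent=2))
```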

Industry Example: NHS & Healthcare Cybersecurity

The National Health Service in the UK implemented Elastic Stack to monitor security logs, detect data breaches, and ensure regulatory compliance, preventing unauthorized access to patient data.

Medical Research & Accelerating Drug Discovery

The Challenge

Medical researchers and pharmaceutical companies deal with vast amounts of data from clinical trials, genomic research, and biomedical imaging. Extracting insights requires fast search capabilities and advanced analytics.

Elastic Solution

- Processing of large research datasets and publications

- Dashboards displaying trends and insights for researchers

- Fast access to data without performance bottlenecks
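Trend dashboards over research data are typically backed by aggregations. The sketch below builds a search body that counts matching documents per calendar month with a `date_histogram` aggregation, plus a helper that reads the buckets from the response. The field names `abstract` and `published_at` and both helper names are hypothetical.

```python
import json


def build_trend_query(search_text: str, text_field: str = "abstract",
                      date_field: str = "published_at") -> dict:
    """Search body: count matching documents per calendar month (hypothetical field names)."""
    return {
        "size": 0,  # only the aggregation is needed, not the matching documents
        "query": {"match": {text_field: search_text}},
        "aggs": {
            "per_month": {
                "date_histogram": {"field": date_field, "calendar_interval": "month"}
            }
        },
    }


def monthly_counts(response: dict) -> list[tuple[str, int]]:
    """Extract (month, document count) pairs from a date_histogram response."""
    buckets = response["aggregations"]["per_month"]["buckets"]
    return [(b["key_as_string"], b["doc_count"]) for b in buckets]


if __name__ == "__main__":
    print(json.dumps(build_trend_query("sepsis biomarkers"), indent=2))
```

Setting `size` to 0 keeps the response small, since a trend chart needs only the bucket counts, not the documents themselves.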

Use Case: Influence Health

Influence Health moved from a SQL database to Elasticsearch, reducing query times from hours to milliseconds and enabling faster analysis of patient treatment data and research findings.

Why Hyperflex? Your Trusted Elastic Partner for Healthcare Solutions

Hyperflex is a certified Elastic Partner with a team of certified Elastic engineers specializing in healthcare data search, security, and compliance.

Our Expertise Includes:

✔ Data migration and integration with no downtime

✔ Secure and regulatory-compliant Elastic deployments

✔ Real-time patient monitoring and security solutions

✔ Performance optimization and version upgrades for Elastic environments

📞 Contact us today for a consultation.

Conclusion

Elastic Stack is transforming how healthcare organizations manage data. From electronic health records and patient monitoring to medical research and security, Elastic provides a scalable and efficient solution for healthcare providers worldwide.

Hyperflex delivers expert consulting, implementation, and long-term support for healthcare-focused Elastic deployments.

📞 Talk to an Elastic healthcare expert today

Hyperflex: The Elastic Partner That Brings Performance, Flexibility, and Security

When people hear the term Hyperflex, they often associate it with premium wetsuits built for surfers and divers who need flexibility, durability, and performance in extreme conditions. However, Hyperflex.co is an entirely different kind of game-changer: one that brings flexibility and performance to search, observability, and security through Elastic.

If you’re searching for Hyperflex Wetsuits but stumbled upon Hyperflex.co, you’re about to discover a new kind of flexibility—one that helps businesses optimize their search and security solutions with Elastic’s advanced technologies.

What is Hyperflex? A Tale of Two Industries

There are two main Hyperflex brands dominating search results:

- Hyperflex Wetsuits – Known for high-performance surf gear, wetsuits, and water-resistant boots.

- Hyperflex.co – A certified Elastic consulting partner, providing search, observability, and security solutions for businesses in industries like finance, healthcare, and e-commerce.

While the two operate in different worlds, they share a common mission: delivering flexibility and performance in challenging environments.

Elastic Solutions: The "Wetsuit" for Your Data Performance

Just as Hyperflex Wetsuits protect athletes from harsh ocean conditions, Hyperflex.co ensures businesses have a secure, high-performance data infrastructure.

Here’s how Hyperflex.co’s Elastic consulting services bring flexibility and resilience to businesses:

- Elastic Observability: See Everything, Just Like a Surfer Reads the Waves. Just as surfers rely on wetsuits to stay warm and agile, businesses rely on Elastic Observability to track logs, metrics, and traces across their IT infrastructure. Hyperflex helps integrate, monitor, and optimize Elastic Observability so businesses can react to performance issues before they become major problems.

- Elasticsearch: The Performance Fit for Your Data. Surfers need a perfect wetsuit fit, and businesses need search solutions that fit their unique needs. Hyperflex’s Elasticsearch expertise enables organizations to retrieve relevant data quickly, so employees and customers find what they need.

- Security with Elastic SIEM: Protection Against the Elements. Just as Hyperflex Wetsuits protect surfers from freezing waters, Hyperflex’s Elastic Security solutions protect businesses from cyber threats. With SIEM (Security Information and Event Management), Hyperflex provides real-time threat detection and response, helping keep data safe and secure.

Hyperflex Pricing: Tailored Elastic Packages for Every Business

Unlike wetsuits, Elastic solutions don’t come in Small, Medium, or Large, but Hyperflex.co tailors its Elastic services to fit the needs of businesses across industries.

Industries We Serve

- Healthcare – Secure and scalable search solutions for medical records.

- Finance – Real-time observability for fraud detection and transaction monitoring.

- E-commerce – Blazing-fast search capabilities for personalized user experiences.

- Education – Data analytics and search optimization for digital learning platforms.

With flexible pricing models, Hyperflex ensures every business gets the right fit—just like a premium wetsuit.

Why Choose Hyperflex for Elasticsearch?

- Certified Elastic Partner – Trusted expertise in Elastic consulting.

- Industry-Specific Solutions – Tailored services for finance, healthcare, and more.

- End-to-End Support – From setup to scaling, monitoring, and securing Elasticsearch deployments.

Just like a Hyperflex Wetsuit is built to withstand tough ocean conditions, Hyperflex.co is built to ensure your data infrastructure withstands cyber threats, downtime, and performance bottlenecks.

Final Thoughts: Two Hyperflex Brands, One Common Goal

Whether you're looking for a Hyperflex Wetsuit to take on the waves or Hyperflex.co’s Elastic solutions to take on enterprise challenges, performance, flexibility, and security are at the heart of both brands.

Looking for expert Elastic consulting? Contact Hyperflex.co today: marketing@hyperflex.co.

Still searching for wetsuits? Visit hyperflexusa.com for the latest in surf gear.

Setting Up an Elasticsearch Cluster: Common Issues and How to Fix Them

Struggling to add a node to your Elasticsearch cluster? Learn how Hyperflex helps teams troubleshoot cluster formation, TLS configs, and more for reliable setup.

Elasticsearch is a powerful distributed search and analytics engine, but setting up a multi-node cluster can be tricky—especially when dealing with configuration errors, node discovery issues, and security settings.

At Hyperflex, we specialize in helping businesses deploy and optimize Elasticsearch for high performance, security, and scalability. In this post, we’ll walk through some common issues faced when adding a node to an Elasticsearch cluster, troubleshooting steps, and best practices to ensure a seamless setup.

1. Understanding Elasticsearch Cluster Formation

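An Elasticsearch cluster consists of multiple nodes that work together to handle search and indexing requests. Each node takes on one or more roles:

- Master Node: Manages cluster-wide state, such as creating and deleting indices, tracking which nodes are in the cluster, and deciding where shards are allocated.

- Data Nodes: Store data and run data operations such as search and aggregations.

- Ingest Nodes: Run ingest pipelines to pre-process incoming documents before indexing.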

For a successful cluster setup, nodes must properly discover each other, and configuration settings must be correctly defined.
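A quick pre-flight check of the new node's settings can catch the most common discovery mistakes before startup. The sketch below is a hypothetical helper, `check_join_config`, that takes the node's `elasticsearch.yml` settings as flat keys and reports likely problems with `cluster.name`, `discovery.seed_hosts`, `cluster.initial_master_nodes`, and `network.host`. It is an illustration under those assumptions, not an official Elastic tool.

```python
# Values that keep a node bound to loopback only (Elasticsearch binds to _local_ by default).
LOOPBACK_HOSTS = {"localhost", "127.0.0.1", "::1", "_local_"}


def check_join_config(node_settings: dict, expected_cluster_name: str) -> list[str]:
    """Return likely reasons a new node would fail to join (hypothetical pre-flight check).

    node_settings mirrors the node's elasticsearch.yml as flat keys.
    """
    problems = []
    if node_settings.get("cluster.name") != expected_cluster_name:
        problems.append("cluster.name does not match the existing cluster")
    if not node_settings.get("discovery.seed_hosts"):
        problems.append("discovery.seed_hosts is empty; the node has no master-eligible nodes to contact")
    if "cluster.initial_master_nodes" in node_settings:
        problems.append("cluster.initial_master_nodes is only for bootstrapping a new cluster; remove it when joining")
    if node_settings.get("network.host", "_local_") in LOOPBACK_HOSTS:
        problems.append("network.host is loopback-only; other nodes cannot reach this node's transport port")
    return problems


if __name__ == "__main__":
    new_node = {
        "cluster.name": "prod-search",
        "node.name": "node-4",
        "network.host": "10.0.0.14",
        "discovery.seed_hosts": ["10.0.0.11:9300", "10.0.0.12:9300"],
    }
    for problem in check_join_config(new_node, "prod-search") or ["no obvious problems found"]:
        print(problem)
```

The seed hosts in the example point at port 9300, the default transport port that nodes use to talk to each other; firewalls between nodes must allow it.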

2. Common Issue: New Node Cannot Join the Cluster

A typical problem occurs when a new node fails to join the existing cluster, leading to errors like: